The terms and metrics in page speed reports can be hard to interpret. To help, we have compiled a glossary of over 50 web performance terms, each with a clear definition and explanation. Whether you’re optimizing your site for better load times or improving user experience, this glossary is meant to take the guesswork out of web performance terminology.

Key Jargon in Website Page Speed:

AJAX

AJAX (Asynchronous JavaScript and XML) is a technique for sending and receiving data from a server asynchronously. This allows web pages to be updated dynamically without a full page reload, so specific parts of a page can be refreshed independently. By using AJAX, web applications can retrieve data from the server in the background and update the user interface seamlessly, creating a faster and more dynamic user experience.

Application Performance Monitoring (APM)

Application Performance Monitoring (APM) involves tools and processes to track the performance, consistency, and availability of web applications. APM focuses on several key areas: user experience, transaction profiling, application code-level diagnostics, deep-dive analytics, and infrastructure visibility. The primary goal of APM is to ensure that applications perform optimally and provide a positive user experience. It is important to note that APM (Application Performance Monitoring) is a component of the broad strategy known as Application Performance Management, which aims for comprehensive performance excellence.

Asynchronous/Synchronous Loading

- Asynchronous Loading: This technique allows multiple scripts to load simultaneously rather than in a specific order. The benefit of asynchronous loading is that it can significantly speed up page load times. However, it may lead to technical issues such as content flickering because the scripts are not guaranteed to load in a specific sequence.

- Synchronous Loading: With synchronous loading, a web page’s resources are loaded in a specific order. While this approach can lead to slower load times, it ensures that scripts are executed in the correct order, preventing errors and providing a smoother, more predictable user experience.

Browser Cache

The browser cache stores copies of previously downloaded resources, such as images, stylesheets, and scripts, on the user’s local computer. On later visits, the browser can reuse these copies instead of downloading them again, which shortens load times.

CPU

The Central Processing Unit (CPU), often called the “processor,” is the component of a computer responsible for processing and executing instructions. The CPU consists of two primary parts: the control unit and the arithmetic logic unit (ALU). The control unit directs the operation of the processor, fetching and interpreting instructions from memory, while the ALU performs arithmetic and logical operations. The CPU plays a key role in the performance of a computer, as it handles all the computations and data processing tasks required by software applications.

CSS

Cascading Style Sheets (CSS) are files that describe how HTML elements should be displayed on web pages. CSS defines a website’s visual presentation, including aspects such as colors, fonts, layouts, and overall design. It allows developers to create visually appealing and responsive websites that adapt to different screen sizes and devices. If HTML provides the structure of a webpage (the skeleton), CSS specifies its appearance (the skin). By separating content from design, CSS makes it easy to maintain and update the look and feel of a website.

CSS Object Model

The CSS Object Model (CSSOM) encompasses all the styles applied to a web page or, put differently, provides instructions on how to style the Document Object Model (DOM). It’s crucial to note that CSS is render-blocking, which means the content cannot be displayed until the CSSOM is entirely constructed. Therefore, optimizing by removing unnecessary CSS rules can significantly enhance the page load speed.

Cache Invalidation

Cache invalidation refers to removing or replacing entries in a cache. For example, NitroPack offers cache invalidation services where cached content is marked as outdated but continues to be served until newly optimized content becomes available. This approach is particularly beneficial during high-traffic periods or marketing campaigns.

Chrome UX Report

The Chrome UX Report (CrUX) is a publicly accessible dataset containing real-user performance data from millions of websites. This data allows web developers and site owners to gain insights into how Chrome browser users experience their websites and those of their competitors.

Client-Side Rendering

Client-side rendering occurs when a website’s JavaScript code is executed within the user’s web browser rather than on the server (server-side rendering). In this scenario, the server delivers the basic structure of the web page while the client-side JavaScript handles the rendering of additional content and interactivity. This approach can make navigation after the first load faster and the experience more dynamic, especially in modern web applications, although the first render may be delayed while the browser downloads and executes the JavaScript.

Compression

Compression, also known as “data compression,” is the process of encoding data so that it takes up fewer bits, reducing its size on disk and in transit. The primary benefits of data compression include faster data transmission times and reduced costs associated with storage hardware and bandwidth.
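As a rough illustration of the size reduction described above, the following Python sketch compresses a repetitive HTML-like payload with `gzip` from the standard library. The sample payload and the `compression_ratio` helper are made up for this example, and real savings depend heavily on the content.

```python
import gzip


def compression_ratio(data: bytes) -> float:
    """Return compressed size divided by original size (lower is better)."""
    compressed = gzip.compress(data)
    return len(compressed) / len(data)


# Hypothetical payload: repetitive markup tends to compress very well.
payload = b"<li class='item'>Hello, world</li>\n" * 200

ratio = compression_ratio(payload)
# Decompressing returns the original bytes, so nothing is lost.
restored = gzip.decompress(gzip.compress(payload))
print(f"compressed to {ratio:.1%} of the original size")
```

Text resources such as HTML, CSS, and JavaScript usually benefit most, because they contain a lot of repetition.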

Concatenation

Concatenation is the process of combining similar website resources into a single file. For example, multiple CSS or JavaScript files can be merged into a larger CSS or JavaScript file. This practice enables the browser to locate and download all necessary resources more efficiently, thereby speeding up the rendering of web pages.

Important Note: Combining different JavaScript files can be challenging and potentially cause website issues. Scripts can originate from various sources, such as code, frameworks, themes, or plugins, and they often depend on each other to function correctly. Ensuring scripts run in the correct order after concatenation is crucial to prevent unintended errors.
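The ordering concern can be sketched in a few lines of Python. This is a minimal illustration, not a real bundler: the file names, their contents, and the `concatenate` helper are all hypothetical, and the caller is assumed to know the correct dependency order.

```python
def concatenate(sources: dict, order: list) -> str:
    """Join script sources in the given order, one labelled chunk per file."""
    missing = [name for name in order if name not in sources]
    if missing:
        raise KeyError(f"missing sources: {missing}")
    # The trailing ";" guards against files that omit their final semicolon.
    return "\n".join(f"/* {name} */\n{sources[name]};" for name in order)


# Hypothetical files: app.js uses `add`, which lib.js defines,
# so lib.js has to come first in the merged file.
sources = {
    "app.js": "console.log(add(1, 2))",
    "lib.js": "const add = (a, b) => a + b",
}
bundle = concatenate(sources, order=["lib.js", "app.js"])
print(bundle)
```

Swapping the two names in `order` would produce a bundle that calls `add` before it is defined, which is the kind of error the note above warns about.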

Core Web Vitals

Core Web Vitals (CWV) encompass three key metrics: LCP (Largest Contentful Paint), FID (First Input Delay), and CLS (Cumulative Layout Shift). These metrics measure the load time, responsiveness, and visual stability of a web page, respectively. In March 2024, Interaction to Next Paint (INP) replaced FID as the responsiveness metric. Since June 2021, Core Web Vitals have been a ranking signal for Google Search.

Critical CSS

Critical CSS, also known as critical path CSS, refers to the CSS styles applied to the above-the-fold content of a web page. It specifically targets the CSS required for the initial view of the page, ensuring that it loads quickly and optimizes user experience by presenting content promptly upon page load.

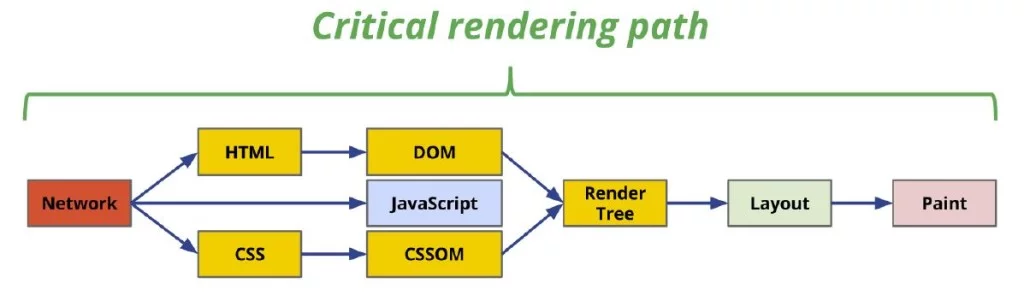

Critical Rendering Path

The critical rendering path describes the sequence of steps a browser follows to render a web page from the received HTML, CSS, and JavaScript into pixels on the screen. These steps include building the Document Object Model (DOM), constructing the CSS Object Model (CSSOM), combining them into a Render Tree, performing Layout calculations to determine the exact position and size of each element, and finally, painting the pixels onto the screen. Optimizing the critical rendering path is essential for improving the time it takes for the initial content to appear on the screen, known as the Time to First Render (TTFR). This optimization is crucial for enhancing user experience by ensuring faster page loading times.

Critical Request Chains

Critical Request Chains refer to a sequence of interdependent network requests that are crucial for rendering a web page. These requests typically involve fetching resources such as HTML, CSS, JavaScript, images, and other assets necessary for displaying the page content. The length of these chains and the size of the downloaded resources significantly impact the page loading performance. Longer chains or larger file sizes can lead to slower load times and delay the rendering of the page content, thereby affecting user experience negatively.
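One way to reason about chain length is to treat the requests as a dependency tree and look for the deepest path. The sketch below does this in Python for a hypothetical page; the resource names and the `longest_chain` helper are assumptions for illustration, and the graph is assumed to contain no cycles.

```python
def longest_chain(deps: dict, root: str) -> list:
    """Return the longest root-to-leaf request chain (assumes no cycles)."""
    best = [root]
    for child in deps.get(root, []):
        candidate = [root] + longest_chain(deps, child)
        if len(candidate) > len(best):
            best = candidate
    return best


# Hypothetical page: the HTML requests a stylesheet and a script,
# and the stylesheet in turn requests a font.
deps = {
    "index.html": ["style.css", "app.js"],
    "style.css": ["font.woff2"],
}
chain = longest_chain(deps, "index.html")
print(" -> ".join(chain))
```

Here the font sits at the end of a three-request chain, so it cannot start downloading until both the HTML and the stylesheet have arrived.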

Cumulative Layout Shift

Cumulative Layout Shift is a metric that quantifies how much the content of a webpage shifts unexpectedly during its loading and interaction phases. These shifts occur when elements on the page change position without user input, often due to late-loading images, ads, or dynamically injected content. CLS measures the cumulative impact of these layout shifts throughout the page’s lifespan. Minimizing CLS is critical for providing a stable and predictable user experience, as unexpected layout changes can disrupt users and lead to accidental clicks or navigations.
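To make the aggregation concrete, here is a hedged Python sketch. It assumes Chrome's published "session window" rule, which the text above does not spell out: shifts less than 1 second apart are grouped into a window of at most 5 seconds, and CLS is the largest window total. The timestamps and scores are invented sample data, and shifts caused by user input are assumed to be filtered out already.

```python
def cumulative_layout_shift(shifts: list) -> float:
    """shifts: (timestamp_ms, score) pairs sorted by time.

    Returns the largest session-window total, where a new window starts
    after a gap of 1 s or once the current window has lasted 5 s.
    """
    best = 0.0
    window_total = 0.0
    window_start = None
    previous = None
    for timestamp, score in shifts:
        starts_new_window = (
            previous is None
            or timestamp - previous >= 1000
            or timestamp - window_start >= 5000
        )
        if starts_new_window:
            window_start = timestamp
            window_total = 0.0
        window_total += score
        previous = timestamp
        best = max(best, window_total)
    return best


# Hypothetical shifts: two close together, then one much later.
shifts = [(100, 0.05), (600, 0.02), (3000, 0.04)]
cls = cumulative_layout_shift(shifts)
print(f"CLS is about {cls:.2f}")
```

The first two shifts fall into one window and the third starts a new one, so the reported value comes from the first window.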

DOM (Document Object Model)

The Document Object Model is an application programming interface (API) for HTML and XML documents. It represents the structure of a webpage as a tree, where every branch ends in a node that contains objects representing elements on the page. This structured representation allows programs to dynamically access and manipulate the content, structure, and style of the document using DOM methods. Essentially, the DOM enables developers to interact with web pages programmatically, making changes to elements such as text, attributes, and styles in response to user actions or other events.

Edge Computing

Edge computing, or edge, is a networking approach designed to minimize latency and bandwidth usage by processing data closer to where it is generated or consumed. Rather than relying solely on the centralized cloud servers, edge computing distributes computational processes to local edge devices, such as IoT devices, edge servers, or even end-user devices. By processing data locally rather than sending it to distant data centers, edge computing reduces the time and bandwidth required for data to travel across networks. This approach is beneficial for applications that require real-time data processing and low-latency responses.

ETag (Entity Tag)

ETag, short for Entity Tag, is an HTTP response header used for web caching. It is an identifier assigned by a web server to uniquely identify a specific version of a resource, such as a file or a webpage. The server sends the ETag with its response, and when the client later requests the same resource, it sends the stored value back in an If-None-Match request header. If the resource has not been modified since the ETag was generated, the server can respond with a 304 status code (Not Modified), indicating the client’s cached copy is still valid. This mechanism helps to reduce bandwidth usage and improve performance by allowing browsers and caches to store and reuse previously fetched resources efficiently.
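The revalidation flow can be sketched from the server's point of view. In this Python example, `make_etag` and `respond` are hypothetical helpers, and hashing the body is only one possible way to derive an ETag; real servers may use file modification times or version numbers instead.

```python
import hashlib
from typing import Optional


def make_etag(body: bytes) -> str:
    """Derive an ETag from the response body (ETag values are quoted)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str] = None):
    """Return (status, headers, body), honoring an If-None-Match value."""
    etag = make_etag(body)
    if if_none_match == etag:
        # The client's cached copy is current: send no body.
        return 304, {"ETag": etag}, b""
    return 200, {"ETag": etag}, body


page = b"<h1>Hello</h1>"
status1, headers1, _ = respond(page)                 # first visit
status2, _, body2 = respond(page, headers1["ETag"])  # revalidation, unchanged
status3, _, _ = respond(b"<h1>Changed</h1>", headers1["ETag"])  # content changed
print(status1, status2, status3)
```

The second request costs only a few header bytes, because the 304 response carries no body.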

Field Data (Real User Monitoring)

Field data, also known as Real User Monitoring (RUM) or Real User Metrics (RUM), provides insights into how real users experience a website in their natural environments. Unlike lab data collected in controlled testing environments, field data reflects the actual conditions and behaviors of users, including factors like network conditions, device types, and geographic locations. Field data is considered more reliable than lab data for assessing user experience because it captures real-world performance metrics that directly impact user satisfaction and engagement on a website.

First Contentful Paint (FCP)

First Contentful Paint (FCP) is a user-centric performance metric that measures the time from navigation to when the browser first renders any content on the screen, such as text or images, from the DOM. FCP indicates when users first perceive that a webpage is loading and displaying content. According to the thresholds used by tools like PageSpeed Insights, FCP should ideally occur within 1.8 seconds of navigation to ensure a fast and responsive user experience.

First Input Delay (FID)

First Input Delay (FID) is a user-centric performance metric that evaluates the responsiveness of a web page. It measures the delay between the user’s first interaction with the page, such as clicking a link or tapping a button, and the moment the browser is able to begin responding to that interaction. FID provides insights into how quickly the page reacts to user input, which directly impacts user experience.

Previously part of Google’s Core Web Vitals, FID was replaced with Interaction to Next Paint (INP) on March 12, 2024. A low FID score indicates that users experience minimal delay when interacting with the website, contributing to a smoother and more responsive browsing experience.

First Meaningful Paint (FMP)

First Meaningful Paint (FMP) is a performance metric that signifies when the primary content of a web page becomes visually available to users. It measures the time from when a user initiates the page load to when the most relevant above-the-fold content is rendered on the screen. FMP marks the point at which users can start consuming meaningful content, such as text and images, without waiting for the entire page to load.

FMP is essential for assessing perceived loading speed and user engagement. Websites aiming for optimal user experience strive to achieve a fast FMP, ensuring that critical content is displayed promptly to users upon page load.

Front-end Optimization (FEO)

Front-end Optimization (FEO) encompasses a set of techniques aimed at improving the performance of web pages directly within the user’s browser. FEO focuses on optimizing how content is delivered and displayed, thereby enhancing user interaction and experience.

Key practices in FEO include:

- Image compression: Reducing the size of images to minimize load times.

- Script concatenation: Combining multiple JavaScript or CSS files into a single file to decrease the number of HTTP requests.

- Minification: Removing unnecessary characters and spaces from code to reduce file size.

- Removing unused CSS: Eliminating CSS rules that are not applied to any elements on the page.

The primary goals of FEO are to improve page load times, reduce the number of requests needed for a page to fully render, and optimize the overall user experience by ensuring swift interaction and content delivery.

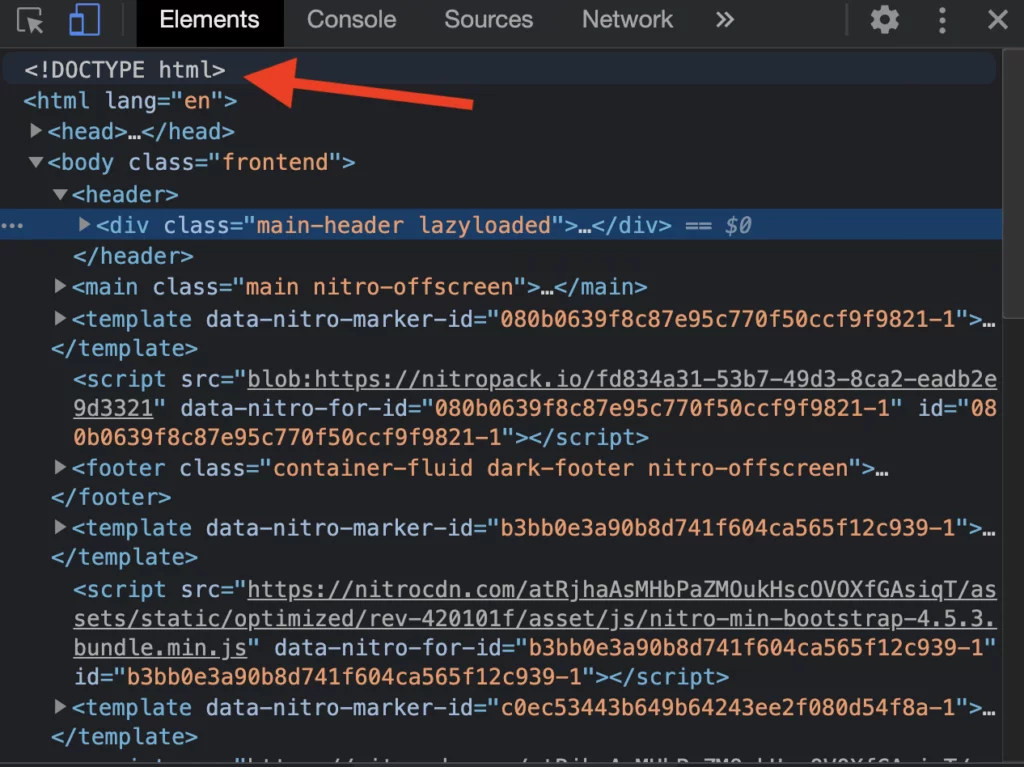

HTML (Hypertext Markup Language)

HTML is the standard markup language used to create and structure content on the World Wide Web. It consists of a set of tags and attributes that define the structure of web pages, including text, images, links, and other multimedia elements. Browsers interpret HTML documents to render web pages according to the specified content and formatting instructions.

Developers use HTML to organize content into paragraphs, headings, lists, tables, forms, and more, enabling browsers to display information in a structured and accessible manner. HTML documents can be inspected and modified using browser developer tools, allowing developers to view the underlying structure and attributes of web elements.

HTTP (HyperText Transfer Protocol)

HTTP (HyperText Transfer Protocol) is the core protocol used for transferring data on the web. It defines a set of rules and conventions for how web browsers and servers communicate with each other to request and deliver web resources, such as HTML pages, images, stylesheets, and scripts.

HTTP operates as a request-response protocol, where a client (usually a web browser) sends a request to a server for a specific resource, and the server responds with the requested data. HTTP forms the backbone of the World Wide Web, allowing users to access and interact with web content seamlessly across different devices and platforms.

HTTP Caching

HTTP caching is a mechanism that improves web performance by temporarily storing (caching) previously requested web resources on the client side (e.g., in the browser) or intermediary servers (e.g., proxies and CDNs). By caching frequently accessed resources such as HTML pages, images, stylesheets, and JavaScript files, HTTP caching reduces the need for repeated downloads from the server.

When a resource is requested, the browser or proxy server checks if a cached copy exists and is still valid based on caching headers like ETag and Cache-Control. If the resource is cached and valid, it can be retrieved more quickly, significantly reducing page load times and conserving bandwidth. HTTP caching plays a crucial role in optimizing web performance and improving user experience by delivering content faster to users.
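The validity check can be approximated as follows. This Python sketch is deliberately simplified: it looks only at the `max-age`, `no-cache`, and `no-store` directives of Cache-Control and ignores other inputs a real cache considers, such as `Expires`, `s-maxage`, and heuristic freshness. The helper names are hypothetical.

```python
def max_age(cache_control: str):
    """Extract the max-age value in seconds, or None if it is absent."""
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            return int(value)
    return None


def is_fresh(cache_control: str, age_seconds: int) -> bool:
    """True if a cached response may be reused without revalidation."""
    directives = [d.strip().lower() for d in cache_control.split(",")]
    if "no-store" in directives or "no-cache" in directives:
        return False
    limit = max_age(cache_control)
    return limit is not None and age_seconds < limit


print(is_fresh("public, max-age=3600", age_seconds=120))   # still fresh
print(is_fresh("public, max-age=3600", age_seconds=4000))  # stale
```

When the cached copy is stale, the cache can still avoid a full download by revalidating it with the ETag.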

HTTP Header

The HTTP Header contains essential information regarding the HTTP request or response and the body associated with it. Headers can be categorized based on their context:

- Request headers: These headers provide information about the resource being fetched or the client making the request.

- Response headers: These headers include additional information about the requested resource.

- Representation headers: These headers pertain to details about the body of the resource, such as its encoding, compression, language, and location. Notable representation headers include Content-Type, Content-Encoding, Content-Language, and Content-Location.

HTTP headers play a critical role in facilitating communication between clients (like web browsers) and servers. They convey necessary metadata that helps browsers interpret and process the content appropriately.

Image Optimization

Did you know that images often constitute a significant portion of a webpage’s total weight? Image optimization is a strategic process aimed at delivering high-quality visuals in the optimal format, size, dimensions, and resolution while minimizing file size.

Efficient image optimization positively impacts a website’s overall performance by:

- Reducing page weight: Ensuring faster load times and improved user experience.

- Optimizing visual quality: Maintaining image clarity and fidelity while minimizing file size.

- Enhancing bandwidth efficiency: Decreasing data transfer requirements without compromising image quality.

Image Compression

Image compression is a specific technique within image optimization that reduces the file size of images using various algorithms. By minimizing redundancy and encoding data more efficiently, compression enhances storage capacity and accelerates transmission speeds across networks.

There are two main types of image compression:

- Lossy compression: Sacrifices some image quality for significant file size reduction. It’s suitable for photographs and images where slight quality loss is acceptable.

- Lossless compression: Reduces the file size without compromising image quality. It’s ideal for graphics, logos, and illustrations where maintaining precise detail is critical.

JavaScript

JavaScript is a versatile programming language used to create dynamic and interactive elements on websites. It empowers developers to manipulate webpage content in real time, enabling features such as animations, form validations, interactive maps, and more.

While JavaScript enhances user engagement and functionality, improper implementation or excessive use can impact performance:

- Search engine accessibility: JavaScript can make it challenging for search engine bots to index content embedded in dynamic elements.

- Page load times: Excessive JavaScript execution can prolong page load times, affecting user experience on slower connections.

Lab Data

Lab data refers to performance metrics collected under controlled conditions using specific devices and network configurations. Unlike real-world scenarios, lab data provides insights into how a webpage performs under idealized conditions, aiding in performance analysis and debugging.

While valuable for identifying technical issues, lab data may not fully reflect actual user experiences across diverse devices and network environments. Therefore, it’s essential to complement lab data with real user monitoring (RUM) to comprehensively understand website performance.

Largest Contentful Paint (LCP)

Largest Contentful Paint measures the time taken for the largest content element within the viewport to render on the screen during page load. It signifies when the primary content, such as images or text blocks, becomes visually available to users.

Aiming for an LCP of under 2.5 seconds, as PageSpeed Insights recommends, ensures prompt loading of critical webpage content. Optimizing for LCP improves perceived loading speed and enhances user engagement by minimizing visual delays.

Layout

During the layout stage of webpage rendering, the browser calculates the exact size, position, and arrangement of every visible element based on their respective styles and properties. This process determines how elements are displayed on the screen relative to each other and the overall page structure.

Efficient layout calculation is crucial for optimizing rendering performance and ensuring consistent presentation across different devices and screen sizes.

Lazy Loading

Lazy loading is a performance optimization technique that defers the loading of non-critical resources (such as images, CSS, and JavaScript) until they are needed. By prioritizing the loading of essential content first, lazy loading reduces initial page load times and improves overall performance.

Implementing lazy loading helps streamline the critical rendering path, enhancing user experience by delivering faster initial page loads and conserving bandwidth.

Minification

Minification is the process of removing unnecessary characters, such as comments, whitespace, and line breaks, from source code files (HTML, CSS, JavaScript) without altering their functionality. This reduction in file size improves download speeds and enhances webpage performance by minimizing data transfer requirements.

By optimizing code through minification, developers can achieve faster loading times and improve the efficiency of web applications and sites.
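As a small illustration, the following Python sketch minifies a CSS snippet with regular expressions. It is intentionally naive and is not safe for arbitrary stylesheets (for example, it would mangle punctuation inside quoted strings); the `minify_css` helper and the sample rule are made up for this example, and production sites would normally rely on a dedicated minifier.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: strips comments and collapses whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop comments
    css = re.sub(r"\s+", " ", css)                         # collapse whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)           # trim around punctuation
    return css.replace(";}", "}").strip()                  # drop last semicolon


source = """
/* Primary button */
.button {
    color: #fff;
    background: #0055ff;
}
"""
minified = minify_css(source)
print(minified)
print(f"{len(source)} characters reduced to {len(minified)}")
```

The browser applies the minified rule exactly as it would the original; only the characters that exist for human readers are gone.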

Next-Gen Image Formats

Next-Gen Image Formats (JPEG 2000, JPEG XR, and WebP) are modern file types that provide better compression and quality characteristics than traditional formats such as JPEG and PNG. By adopting these formats, you can significantly reduce file sizes while maintaining image quality.

Paint

Paint is the final stage in any webpage’s critical rendering path. After the browser constructs the render tree and performs layout calculations, it proceeds to paint pixels on the screen, rendering the page’s visual content.

Preloading

Preloading instructs the browser to download and cache critical resources, such as scripts or stylesheets, as early as possible during page load. This prioritized download helps expedite the rendering process by ensuring essential resources are readily available.

Prefetching

Prefetching involves proactively loading resources before they are needed, aiming to reduce latency and improve user experience. Unlike preloading, prefetching downloads resources with low priority to avoid interference with more urgent resource requests.

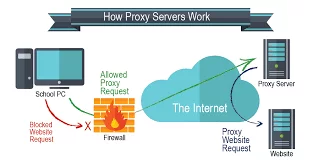

Proxy Server

A proxy server, also known as a forward proxy or web proxy, acts as an intermediary between client computers and web servers. It intercepts client requests and forwards them to the appropriate web servers on behalf of the clients, which can provide anonymity, bypass restrictions, and enhance security.

Redirect Chain

A Redirect Chain occurs when a webpage redirects to another page that subsequently redirects again. These sequences of redirects can impact website loading speed and should be minimized to optimize the user experience and search engine performance.
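The following Python sketch walks a redirect chain and counts the hops. The redirect table stands in for real HTTP responses, and the URLs and the `redirect_chain` helper are hypothetical.

```python
def redirect_chain(redirects: dict, url: str, limit: int = 10) -> list:
    """Follow redirects from url and return every URL visited, in order."""
    chain = [url]
    while chain[-1] in redirects:
        next_url = redirects[chain[-1]]
        if next_url in chain or len(chain) > limit:
            raise ValueError("redirect loop or chain too long")
        chain.append(next_url)
    return chain


# Hypothetical rules: http -> https -> www -> trailing slash.
redirects = {
    "http://example.com/page": "https://example.com/page",
    "https://example.com/page": "https://www.example.com/page",
    "https://www.example.com/page": "https://www.example.com/page/",
}
chain = redirect_chain(redirects, "http://example.com/page")
hops = len(chain) - 1
print(f"{hops} redirects before reaching {chain[-1]}")
```

Each hop costs an extra round trip, so pointing the first URL directly at the final destination would remove two of the three redirects.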

Render Tree

The render tree is a crucial element in webpage rendering, formed by combining the Document Object Model (DOM) and the CSS Object Model (CSSOM). It represents the structure and style information of all visible content on the screen, facilitating the browser’s rendering process.

Render-Blocking Resources

Render-blocking resources are static files, such as CSS and JavaScript, that the browser must download and process before it can render the page. When encountered, these resources prevent the browser from displaying page content until they are fully processed, potentially delaying page display.

Reverse Proxy

A reverse proxy is a server positioned in front of one or more web servers, intercepting client requests and routing them to appropriate backend servers. Unlike forward proxies, which act on behalf of clients, reverse proxies act on behalf of servers and are used for load balancing, caching, SSL encryption, and protecting web servers from malicious attacks.

Server Cache

Server cache differs from browser cache in terms of where data is stored. While browser cache stores data on the user’s local computer, server cache stores data on a server. There are various types of server caching techniques aimed at optimizing performance and reducing load times:

- Object Caching: This involves caching database queries or frequently accessed data objects in memory to reduce the need for repeated database calls, thereby improving response times.

- CDN Caching: Content Delivery Network (CDN) caching involves storing cached content across a network of distributed servers located worldwide. This allows users to access the content from the server closest to their geographical location, improving delivery speed and reducing latency.

- Opcode Caching: Opcode caching is specific to server-side scripting languages like PHP. It involves caching the compiled bytecode of PHP scripts in memory to avoid the overhead of parsing and compiling PHP code on every request.

- Page Caching: This caches entire web pages in memory or disk storage, enabling the server to serve pre-rendered HTML pages quickly without re-executing the entire page generation process.

Server caching mechanisms are crucial for optimizing web performance by reducing server load, improving response times, and enhancing overall user experience.
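Object caching, the first technique in the list above, can be sketched as a small in-memory store with a time-to-live. The `ObjectCache` class and `slow_query` function below are hypothetical stand-ins; a production setup would more likely use a dedicated cache service.

```python
import time


class ObjectCache:
    """Tiny in-memory cache with a per-entry time-to-live (TTL)."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        entry = self._store.get(key)
        if entry is not None and entry[0] > self.clock():
            return entry[1]  # cache hit: skip the slow work
        value = compute()    # cache miss: do the slow work once
        self._store[key] = (self.clock() + self.ttl, value)
        return value


calls = []


def slow_query():
    calls.append(1)  # stands in for an expensive database call
    return {"user": "alice"}


cache = ObjectCache(ttl_seconds=60)
first = cache.get_or_compute("user:1", slow_query)
second = cache.get_or_compute("user:1", slow_query)
print(f"query ran {len(calls)} time(s) for 2 lookups")
```

The TTL bounds how long stale data can be served, which is the same trade-off cache invalidation deals with.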

Server-Side Rendering (SSR)

Server-side rendering (SSR) is a technique where web pages are rendered on the server and sent to the client’s browser as fully rendered HTML documents. This contrasts with client-side rendering (CSR), where web pages are rendered in the browser using JavaScript after receiving minimal HTML content from the server.

SSR improves perceived performance by delivering pre-rendered HTML that the browser can display immediately, rather than waiting for JavaScript to build the page from scratch. This approach is beneficial for content-heavy websites and can enhance SEO by ensuring that search engine crawlers receive fully rendered content.

Service Level Agreement (SLA)

A Service Level Agreement is a contractual agreement between the service provider and a user that defines the level of service expected during a specified period. SLAs are commonly used in cloud services, hosting, CDN services, and other IT-related services. Key aspects typically covered in an SLA include:

- Performance Metrics: Specifies parameters such as bandwidth availability, uptime percentage, and response times for routine and ad hoc queries.

- Support: Defines response times and procedures for handling and resolving service issues, such as network outages or hardware failures.

- Penalties and Remedies: Outlines consequences for failing to meet SLA commitments, including potential compensation or service credits to users affected by service disruptions.

SLAs provide clarity and assurance regarding service expectations, performance standards, and the responsibilities of both parties involved.

Speed Index

Speed Index measures how quickly the content is visually displayed during the loading of a web page. It quantifies the perceived loading speed of a page by evaluating how quickly visible parts of the page are rendered to the user. It is typically measured in milliseconds and varies based on factors like network conditions, device capabilities, and viewport size.

A lower Speed Index indicates faster perceived page load times, contributing to improved user satisfaction and engagement.
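Speed Index is commonly described as the area above the page's visual-completeness curve, that is, the sum over time of the fraction of the viewport that is not yet rendered. The Python sketch below applies that idea to sampled frames. It assumes this definition and uses invented sample data; real tools derive visual completeness from video frames of the page load.

```python
def speed_index(frames: list) -> float:
    """frames: (time_ms, visual completeness in 0..1), sorted, starting at 0.

    Each interval contributes its length times the fraction of the
    viewport still missing at the start of that interval.
    """
    total = 0.0
    for (t0, done), (t1, _) in zip(frames, frames[1:]):
        total += (t1 - t0) * (1.0 - done)
    return total


# Two hypothetical loads that both finish at 1000 ms.
fast = [(0, 0.0), (500, 0.8), (1000, 1.0)]  # most content visible early
slow = [(0, 0.0), (500, 0.1), (1000, 1.0)]  # most content visible late
print(round(speed_index(fast)), round(speed_index(slow)))
```

Both loads complete at the same moment, yet the first scores roughly 600 against roughly 950, because most of its content appears earlier.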

Static Assets

Static Assets refer to non-changeable resources served to users without alteration by the server. These assets include JavaScript files, CSS stylesheets, images, fonts, and other files that do not require server-side processing before being delivered to the client’s browser.

Static assets are typically cached at various levels (browser cache, CDN cache, server cache) to optimize delivery speed and reduce bandwidth usage. They play a crucial role in web performance optimization by ensuring efficient and rapid delivery of essential resources to users.

Time To First Byte (TTFB)

Time To First Byte (TTFB) measures the duration, typically in milliseconds, from the moment a client’s browser sends an HTTP request to the server until it receives the first byte of the server’s response. It is a critical metric in web performance analysis as it indicates how quickly a server responds to a request.

Importance of TTFB

- User Perception: TTFB influences user perception of website speed. A longer TTFB can make a site feel slow, even if subsequent content loads quickly.

- SEO Impact: TTFB is not itself a Core Web Vital, but it precedes every other loading metric, so a slow TTFB delays FCP and LCP, which can in turn affect SEO.

- Server Performance: TTFB reflects server performance and can be optimized through server configurations, such as faster hosting, reducing server-side processing time, and efficient caching strategies.

Improving TTFB

- Optimize Server Configuration: Use faster servers, reduce server response times, and optimize database queries.

- Utilize Caching: Employ caching mechanisms like server-side caching, CDN caching, and opcode caching to serve content faster.

- Minimize Server Requests: Combine and minify CSS and JavaScript files to reduce the number of HTTP requests.

- Content Delivery Network (CDN): Use CDNs to serve content from servers which are closer to the users, reducing latency.

Time To Interactive (TTI)

Time To Interactive measures the time it takes for a web page to become fully interactive for users. A page is considered fully interactive when:

- Useful Content Displayed: Content relevant to the user is displayed, typically measured by First Contentful Paint (FCP).

- Event Handlers Registered: Most user interface elements have registered event handlers for interactions.

- Responsive Interactions: The page responds to user interactions (e.g., clicks, taps) within 50 milliseconds.

Importance of TTI

- User Engagement: Faster TTI enhances user experience by allowing users to interact with the page sooner.

- Conversion Rates: Improved TTI can lead to higher conversion rates as users engage more readily with interactive elements.

- SEO Considerations: TTI is not one of Google’s Core Web Vitals, but the heavy JavaScript work that delays TTI also tends to hurt the responsiveness metric that is.

Total Blocking Time (TBT)

Total Blocking Time (TBT) measures the total time, in milliseconds, during which Long Tasks (tasks that exceed 50 milliseconds) block the main thread and hinder page interactivity. Only the portion of each Long Task beyond 50 milliseconds counts toward the total. TBT, along with Largest Contentful Paint (LCP), is crucial for evaluating a page’s usability and performance.

Impact of TBT

- Page Responsiveness: High TBT indicates that Long Tasks are delaying user interactions, negatively impacting user experience.

- Optimization Focus: Reducing TBT involves optimizing JavaScript execution, minimizing JavaScript file sizes, and deferring non-critical scripts.
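The arithmetic behind TBT is straightforward to sketch. In the Python example below, the task durations are invented, and the list is assumed to cover only the main-thread tasks in the window the measuring tool cares about (Lighthouse, for instance, looks at the period between FCP and TTI).

```python
LONG_TASK_THRESHOLD_MS = 50


def total_blocking_time(task_durations_ms: list) -> float:
    """Sum the portion of each task that exceeds the 50 ms threshold."""
    return sum(
        max(0.0, duration - LONG_TASK_THRESHOLD_MS)
        for duration in task_durations_ms
    )


# Hypothetical main-thread tasks, in milliseconds.
tasks = [30, 120, 50, 250]
tbt = total_blocking_time(tasks)
print(f"TBT: {tbt} ms")
```

Only the 120 ms and 250 ms tasks contribute (70 ms and 200 ms respectively), which is why splitting long tasks into smaller ones lowers TBT even when the total amount of work stays the same.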

User-Agent

A User-Agent is a string of text sent in an HTTP request header that identifies the client’s browser, device, operating system, and other relevant information to the web server. Servers use User-Agent information to deliver optimized content and experiences based on the user’s device type and capabilities.

Waterfall Chart

A Waterfall Chart is a graphical representation that displays the chronological sequence of HTTP requests and responses initiated by a web page’s loading process. It provides detailed insights into each resource’s loading time, dependencies, and execution order, aiding in performance analysis and debugging.

Web Performance Optimization (WPO)

Web Performance Optimization (WPO) encompasses methodologies and techniques aimed at improving the speed of web applications. WPO focuses on optimizing page load times, reducing latency, and enhancing the overall user experience through various strategies such as code minification, image optimization, caching, and server-side optimizations.

WebFont Loader

WebFont Loader is a JavaScript library that enhances control over how web fonts are loaded and displayed on a web page. It enables developers to manage font loading from multiple providers efficiently and provides standardized events for controlling the loading process, ensuring a smoother and more consistent font loading experience across different browsers and devices.
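A typical setup passes a configuration object to WebFont.load(). The sketch below assumes the library's script has already been included on the page; the font family and the log messages are placeholders rather than recommendations.

```javascript
// Configuration for WebFont Loader; the font family is a placeholder.
const webFontConfig = {
  google: { families: ['Droid Sans'] },
  timeout: 2000, // milliseconds to wait before a font is treated as failed
  loading() {
    console.log('Font loading started');
  },
  active() {
    console.log('At least one font rendered');
  },
  inactive() {
    console.log('No fonts loaded; fallback fonts stay in place');
  },
};

// WebFont is the global defined by the library's script; skip the call if it is absent.
if (typeof WebFont !== 'undefined') {
  WebFont.load(webFontConfig);
}
```

The loading, active, and inactive callbacks correspond to the standardized events mentioned above. The library also sets matching wf-loading, wf-active, and wf-inactive classes on the html element, which lets CSS react to the font state.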

XMLHttpRequest (XHR)

XMLHttpRequest (XHR) is a JavaScript API that enables web browsers to send HTTP or HTTPS requests to a server and receive server responses. It plays a crucial role in enabling asynchronous communication between a web application and the server without requiring a full page reload. Here’s a detailed overview of XMLHttpRequest:

Key Features and Functionality:

- Asynchronous Requests: XHR allows requests to be made asynchronously. This means that the web page can continue to run and update content while waiting for the server’s response.

- Data Exchange: It facilitates the exchange of data between the client (browser) and server in various formats, including XML, JSON, HTML, or plain text.

- Partial Page Updates: XHR enables web pages to update content dynamically without reloading the whole page, which improves user experience by providing faster interactions and reducing bandwidth usage.

- Cross-Origin Requests: By default, the same-origin policy prevents scripts from reading responses from other domains or origins. XHR can make cross-origin requests when the target server opts in through CORS (Cross-Origin Resource Sharing) headers.

- Event-Driven Model: XHR uses an event-driven programming model, allowing developers to define callback functions that handle different stages of the request-response cycle, such as when data is received successfully or when an error occurs.

- Security Considerations: Careful handling of XHR requests is essential to prevent security vulnerabilities like cross-site scripting (XSS) attacks and cross-site request forgery (CSRF).

Typical Use Cases:

- Dynamic Content Updates: Websites use XHR to fetch new data from the server without refreshing the full page, such as updating comments on social media platforms or fetching new articles in news feeds.

- Form Submissions: XHR can handle form submissions asynchronously, providing immediate feedback to users while submitting form data to the server in the background.

- Real-Time Updates: Applications like chat rooms, stock tickers, or live scoreboards can use XHR to poll the server at short intervals and display new data to users as it arrives.

Example Code Snippet:

// Create a new XMLHttpRequest object
const xhr = new XMLHttpRequest();

// Configure an asynchronous GET request
xhr.open('GET', 'https://api.example.com/data', true);

// Handle the response once it has fully loaded
xhr.onload = function () {
  if (xhr.status >= 200 && xhr.status < 300) {
    // Success: parse the JSON response body
    const responseData = JSON.parse(xhr.responseText);
    console.log('Response data:', responseData);
  } else {
    // The server replied with an error status
    console.error('Request failed with status:', xhr.status);
  }
};

// Handle network-level failures, which never reach onload
xhr.onerror = function () {
  console.error('Network error: the request could not be completed');
};

// Send the request
xhr.send();

Best Practices for XHR Usage:

- Use Asynchronous Requests: Keep requests asynchronous. The third parameter of xhr.open() defaults to true; passing false makes the request synchronous, which blocks the main thread and is deprecated outside of Web Workers.

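- Handle Errors Gracefully: Implement error handling using xhr.onerror, xhr.ontimeout, and xhr.onabort to manage network failures, timeouts, and aborted requests. HTTP error statuses such as 404 or 500 still trigger onload, so check xhr.status there as well.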

- Consider Alternative APIs: For modern web applications, consider using the fetch() API, which offers a simpler, promise-based way to make network requests.
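For comparison, here is a minimal sketch of a similar GET request written with fetch(). The getJson helper and its fetchImpl parameter are hypothetical; the parameter exists only so a stub can be swapped in for testing. Unlike XHR's onerror, fetch() rejects only on network failures, so the sketch checks response.ok to catch HTTP error statuses.

```javascript
// Fetch JSON and reject on HTTP error statuses, which fetch() does not do by itself.
// fetchImpl is a hypothetical parameter that lets callers pass a stub in tests.
async function getJson(url, fetchImpl = fetch) {
  const response = await fetchImpl(url);
  if (!response.ok) {
    throw new Error(`Request failed with status: ${response.status}`);
  }
  return response.json();
}

// Usage with a placeholder URL:
// getJson('https://api.example.com/data')
//   .then((data) => console.log('Response data:', data))
//   .catch((error) => console.error(error.message));
```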