Navigating the intricate web of search engine optimization (SEO) can often feel like traversing a labyrinth, with pitfalls and challenges lurking at every turn. One such obstacle that frequently plagues website owners is the issue of missing content. In the vast expanse of the internet, where every morsel of information vies for attention, the risk of crucial content slipping through the cracks is a constant concern.

Imagine having meticulously crafted web pages, brimming with valuable insights and offerings, only to discover that they are nowhere to be found in search engine results. This scenario is all too common, and it can seriously damage online visibility. This post explains what missing content is, why it happens, and how to prevent it.

What Do You Mean by Missing Content?

In the past, SEO was more straightforward: most websites served static HTML that search engines could easily process. Today, problems often stem from large, JavaScript-heavy sites with many pages, such as online stores. If Googlebot crawls only 100 pages on your site but you have 150, some of your critical pages could remain uncrawled, leading to “missing content.”

When a webpage or section thereof is excluded from Google’s crawling process, it cannot be indexed or ranked. This typically occurs when Google exhausts the allocated crawl budget mid-crawl.

The crawl budget denotes the number of pages Googlebot explores on a site within a specific timeframe. If Googlebot can only crawl a limited number of pages, crucial content may go unexamined, resulting in gaps in content coverage. Dynamic pages can make this worse: when links, images, and text are loaded through additional requests, each page may consume more of the budget, and a page whose resources are not all fetched may be processed only partially or not indexed at all.

Failure to capture all content during web crawls can significantly impact SEO effectiveness. Pages omitted from crawling remain invisible to search engines, diminishing their exposure to potential visitors and customers. In scenarios like e-commerce, overlooking newly added product listings in a crawl can lead to missed opportunities and revenue loss.

Strategies for Ensuring Comprehensive Web Crawls

Minimizing the risk of content being overlooked in web crawls is crucial for SEO performance. The following techniques help:

- Maintain Updated Sitemaps

A sitemap is a file containing a comprehensive list of all the web pages on your site, serving to inform search engines about the structure of your site’s content. Typically found at /sitemap.xml, a well-maintained and regularly updated XML sitemap plays a crucial role in enhancing your site’s crawlability. It ensures that new or modified pages are promptly discovered by search engine crawlers, reducing the likelihood of them being overlooked.
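As a rough illustration of the sitemap described above, the sketch below builds a minimal XML sitemap with Python's standard library. The function name `build_sitemap`, the example.com URLs, and the dates are assumptions made for this example; a real site would usually generate the list from its CMS or database.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, lastmod) pairs.

    `lastmod` is expected as a YYYY-MM-DD string (hypothetical input format).
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages; replace with the real URLs of your site.
    print(build_sitemap([
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/managedhosting", "2024-02-01"),
    ]))
```

Regenerating this file whenever pages are added or changed keeps the `lastmod` values meaningful for crawlers.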

- Leverage Robots.txt Files

Located in the root directory of your website, the robots.txt file tells web crawlers which sections of your site they may or may not access. “Disallow” rules keep crawlers out of low-value areas, and the “allow” directive can re-open specific paths inside a disallowed section. However, a misconfigured robots.txt file can cause crawlers to skip crucial pages, so review every rule carefully before deploying it.
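One way to catch a robots.txt mistake before it costs you traffic is to test your important URLs against the rules. The sketch below uses Python's built-in `urllib.robotparser`; the rules in `ROBOTS_TXT`, the helper name `blocked_urls`, and the URLs are hypothetical. Note that Python's parser applies the first matching rule, which can differ from Google's matching when allow and disallow rules overlap, so treat the result as a sanity check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of cart and internal search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    important = [
        "https://www.example.com/managedhosting",
        "https://www.example.com/cart/checkout",
    ]
    # Any important page listed here is being hidden from crawlers.
    print(blocked_urls(ROBOTS_TXT, important))
```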

- Streamline Site Architecture

When pages are buried deep within a website’s hierarchy, they may be overlooked by both users and web crawlers, reducing their visibility and impact. Simplifying URLs and reducing page depth are essential strategies to enhance navigation for both visitors and web crawlers. By simplifying URLs, you make them more user-friendly and easier for visitors to remember and share.

Avoid using complicated URLs like www.nestify.io/xyz/8352528/managedhosting. Clean URLs like www.nestify.io/managedhosting also provide clearer information about the content of the page, improving user experience and increasing the probability of clicks.

Additionally, straightforward URLs are easier for web crawlers to understand and interpret, making it simpler for them to index your site’s content accurately. This streamlines navigation, ensuring that important content is more easily accessible.
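To audit URL depth across a site, a small script can count path segments and flag the deep ones. This is a minimal sketch: the helper names and the `max_depth` threshold of 2 are assumptions for illustration, not a recommended limit.

```python
from urllib.parse import urlsplit


def path_depth(url):
    """Count the non-empty path segments of a URL."""
    path = urlsplit(url).path
    return len([segment for segment in path.split("/") if segment])


def deep_urls(urls, max_depth=2):
    """Return URLs whose path is deeper than max_depth (hypothetical threshold)."""
    return [url for url in urls if path_depth(url) > max_depth]


if __name__ == "__main__":
    pages = [
        "https://www.nestify.io/xyz/8352528/managedhosting",
        "https://www.nestify.io/managedhosting",
    ]
    for page in pages:
        print(path_depth(page), page)
```

URLs need a scheme such as `https://` for `urlsplit` to separate the host from the path.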

- Address Broken Links

Regularly identify and fix broken links to maintain smooth navigation for crawlers, preventing content from being missed due to disruptions in their path.
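A basic broken-link check can be scripted with the standard library. In the sketch below, `fetch_status` and `find_broken_links` are hypothetical helper names; the status lookup is passed in as a parameter so the logic can be tried without network access. Some servers reject HEAD requests, so a real checker may need to fall back to GET.

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10):
    """Return the final HTTP status for url, or None if the request failed."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        # urlopen follows redirects, so this is the status of the final page.
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx and 5xx responses arrive as exceptions
    except OSError:
        return None  # DNS failure, refused connection, timeout, ...


def find_broken_links(urls, get_status=fetch_status):
    """Map each broken URL to its status (None means unreachable)."""
    broken = {}
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken[url] = status
    return broken


if __name__ == "__main__":
    # Offline demonstration with a made-up status table instead of real requests.
    fake_statuses = {"https://www.example.com/": 200,
                     "https://www.example.com/old-page": 404}
    print(find_broken_links(fake_statuses, get_status=fake_statuses.get))
```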

- Enhance Page Load Speed

Users have limited tolerance for slow pages, and crawlers are affected too. When a server responds slowly, crawlers such as Googlebot tend to reduce how many pages they request, so fewer pages are crawled within the same budget. This can leave significant portions of your site uncrawled and unindexed, which may in turn hurt your search rankings. Optimizing page load times is therefore crucial both for user experience and for helping crawlers cover all of your site’s content.

- Strengthen Internal Linking

Effective internal linking simplifies navigation for both users and crawlers. A well-planned internal linking strategy can direct crawlers to all vital content on your site, minimizing the risk of any being overlooked.
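Internal linking can be checked by treating the site as a graph and walking it from the homepage, much as a crawler would. The sketch below runs a breadth-first search over a hypothetical link map (page to the pages it links to) to compute click depth and to list pages that no internal link path reaches. The function names and sample data are assumptions for illustration.

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search: minimum number of clicks from start to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def unreachable_pages(links, start, all_pages):
    """Pages that exist but cannot be reached by following links from start."""
    reachable = click_depths(links, start)
    return sorted(set(all_pages) - set(reachable))


if __name__ == "__main__":
    # Hypothetical site: "/old-offer" links out, but nothing links to it.
    site_links = {
        "/": ["/hosting", "/blog"],
        "/blog": ["/blog/seo"],
        "/old-offer": ["/"],
    }
    pages = ["/", "/hosting", "/blog", "/blog/seo", "/old-offer"]
    print(click_depths(site_links, "/"))
    print(unreachable_pages(site_links, "/", pages))
```

Pages reported as unreachable depend entirely on the sitemap or external links to be discovered, so they are good candidates for new internal links.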

- Ensure Proper Status Codes

It’s a common error to return a 2xx (successful) status code for a page that does not exist, a situation often called a “soft 404.” For example, if a user or crawler requests a nonexistent page and your server returns a 2xx status code alongside a “not found” error page, search engines receive conflicting signals. Because of the success code, they may keep crawling and indexing the “missing” page, squandering valuable crawl budget on nonexistent content. Nonexistent pages should return a genuine 404 (or 410) status.
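A simple heuristic can flag pages that answer with a success code while displaying an error message. The sketch below is only an approximation: the phrase list and the name `looks_like_soft_404` are assumptions, and a real audit would tune the phrases to the wording of your own error template.

```python
# Hypothetical phrases that typically appear on an error template.
NOT_FOUND_PHRASES = ("page not found", "error 404", "no longer exists")


def looks_like_soft_404(status, body, phrases=NOT_FOUND_PHRASES):
    """True when a 2xx response carries text that reads like an error page."""
    if not 200 <= status < 300:
        return False  # a real 404 or 410 is the correct behavior
    text = body.lower()
    return any(phrase in text for phrase in phrases)


if __name__ == "__main__":
    print(looks_like_soft_404(200, "<h1>Page Not Found</h1>"))
    print(looks_like_soft_404(404, "<h1>Page Not Found</h1>"))
    print(looks_like_soft_404(200, "<h1>Managed Hosting</h1>"))
```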

- Mitigate Infinite Spaces

On a website, an infinite space might manifest as a calendar feature with a “next month” link, tempting web crawlers into an endless cycle of page discovery. This relentless pursuit can swiftly deplete your crawl budget. Within this loop, the crawler continually uncovers seemingly ‘new’ pages devoid of value to users or search engines. Consequently, crucial site areas might remain undiscovered.

To tackle this issue, add rel="nofollow" to the links that lead into the infinite space, or use a robots meta tag with the ‘nofollow’ value on the page, to discourage crawlers from following them. Alternatively, use a ‘noindex’ meta tag on repetitive pages to prevent their indexing, or a canonical tag to specify the preferred page version. Keep in mind that noindex and canonical tags are only seen once a page has been crawled, so blocking the URL pattern in robots.txt is the more direct way to protect crawl budget.
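Infinite spaces often show up in server logs or crawl exports as one URL template repeated with ever-changing numbers. The sketch below groups URLs by replacing digit runs with a placeholder and reports templates that occur suspiciously often. The function names and the `threshold` of 50 are assumptions; legitimate templates such as numbered product pages will also appear, so the output is a list to review, not a verdict.

```python
import re
from collections import Counter
from urllib.parse import urlsplit


def url_pattern(url):
    """Collapse every run of digits in the path and query into '{n}'."""
    parts = urlsplit(url)
    path = re.sub(r"\d+", "{n}", parts.path)
    query = re.sub(r"\d+", "{n}", parts.query)
    return path + ("?" + query if query else "")


def suspicious_patterns(urls, threshold=50):
    """Return URL templates seen at least `threshold` times (hypothetical cutoff)."""
    counts = Counter(url_pattern(url) for url in urls)
    return {pattern: count for pattern, count in counts.items() if count >= threshold}


if __name__ == "__main__":
    # Hypothetical crawl export: ten years of calendar pages plus one normal page.
    crawled = [
        f"https://www.example.com/calendar?year={year}&month={month}"
        for year in range(2020, 2030)
        for month in range(1, 13)
    ]
    crawled.append("https://www.example.com/managedhosting")
    print(suspicious_patterns(crawled))
```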

- Embrace Mobile-Friendly Design

Because Google uses mobile-first indexing, it primarily crawls and indexes the mobile version of your pages. Adopting a mobile-friendly or responsive design, and making sure the mobile version contains the same content as the desktop version, improves accessibility for both users and web crawlers.

By incorporating these strategies, you can minimize the likelihood of content being missed during web crawls, thereby optimizing your site’s visibility and SEO performance.

5 Best Tools That Can Help Prevent Missing Content Problems

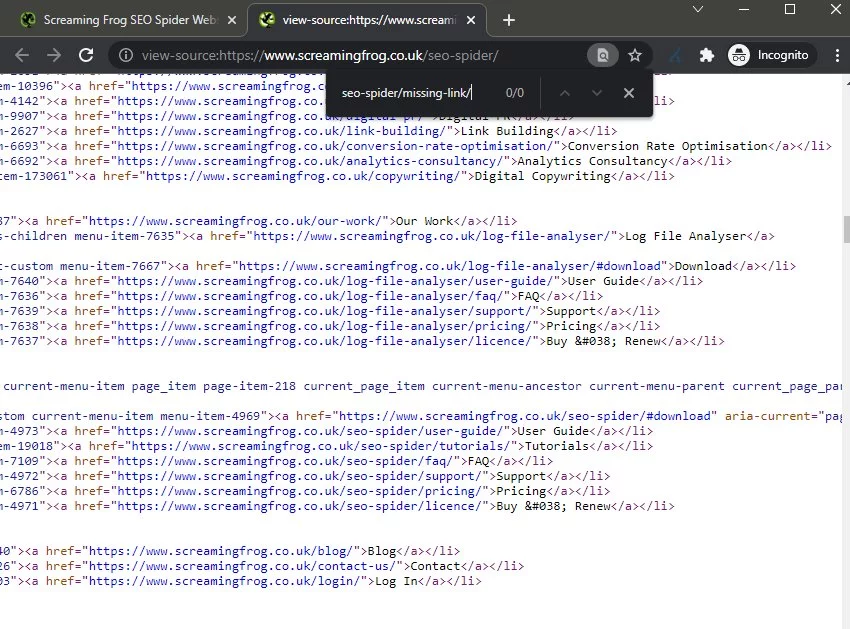

- Screaming Frog SEO Spider: This powerful desktop program crawls websites to analyze key SEO elements, including missing content. It provides insights into URLs, titles, meta descriptions, and more, helping to identify any overlooked or inaccessible content.

- Google Search Console: This free tool offers valuable information about a website’s performance in Google search results. It provides alerts about crawl errors and indexing issues, helping site owners identify and rectify missing content problems.

- Sitebulb: Sitebulb is another website crawler designed to identify SEO issues, including missing content. It provides comprehensive reports with actionable insights to improve website performance and ensure all content is properly indexed.

- DeepCrawl (now Lumar): DeepCrawl is a cloud-based web crawling tool that offers in-depth analysis of websites, highlighting areas where content may be missing or inaccessible to search engines. It provides detailed reports and recommendations for optimizing crawlability.

- Ahrefs: While primarily known for its backlink analysis, Ahrefs also offers a Site Audit tool that helps identify technical SEO issues, including missing content. It provides insights into crawlability issues and offers suggestions for improvement.

By using these tools, website owners can effectively identify and address any missing content issues, ensuring their websites are fully optimized for search engine visibility and user experience.

Wrapping Up

Safeguarding against missing content is essential for maintaining a strong online presence and getting the most out of your website. The strategies outlined in this guide help keep your content visible and accessible to both web visitors and search engine crawlers. Every piece of content matters, and taking proactive steps to prevent it from getting lost strengthens your SEO performance and the overall success of your site.

FAQs

Can missing content be fixed retroactively, or is prevention the only solution?

While prevention is key to avoiding missing content, it’s often possible to fix issues retroactively by addressing crawl errors, improving site structure, and implementing proper redirects for broken links. However, proactive measures to prevent missing content are generally more effective and less time-consuming in the long run.

Are there any specific strategies for preventing missing content in dynamic websites or those using JavaScript frameworks?

Yes, dynamic websites and those built with JavaScript frameworks may require additional considerations to ensure crawlability and indexing. Strategies such as server-side rendering (SSR), pre-rendering content, and providing fallback content in the `<noscript>` HTML tag can help make dynamic content more accessible to search engine crawlers. Additionally, ensuring proper use of canonical tags and structured data markup can aid in accurately indexing dynamic content.
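A quick way to see whether a page depends on JavaScript for its main content is to check the raw HTML, before any script runs, for the phrases you expect crawlers to find. The sketch below does this with Python's built-in HTML parser; the class and function names and the sample markup are assumptions for illustration. If a phrase is reported missing, the content is probably injected client-side and may benefit from SSR or pre-rendering.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text outside <script> and <style> elements."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_raw_html(html, phrases):
    """Return the phrases that do not appear in the page's static text."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]


if __name__ == "__main__":
    # Hypothetical markup: the same heading rendered by script vs. by the server.
    client_rendered = ('<html><body><div id="app"></div>'
                       '<script>render("Managed hosting plans")</script></body></html>')
    server_rendered = "<html><body><h1>Managed hosting plans</h1></body></html>"
    print(missing_from_raw_html(client_rendered, ["Managed hosting plans"]))
    print(missing_from_raw_html(server_rendered, ["Managed hosting plans"]))
```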