Web scraping has become a powerful tool for lead generation, changing how businesses gather customer information and contact details from the web. By automating data collection, web scraping helps businesses expand their reach, stay competitive, and target their marketing more precisely. Instead of relying on laborious manual research, a scraper can quickly extract email addresses, phone numbers, and social media profiles. Used well, web scraping gives businesses a steady supply of fresh, relevant leads for proactive outreach. That makes it a valuable skill for anyone who wants to thrive in their market rather than merely survive.

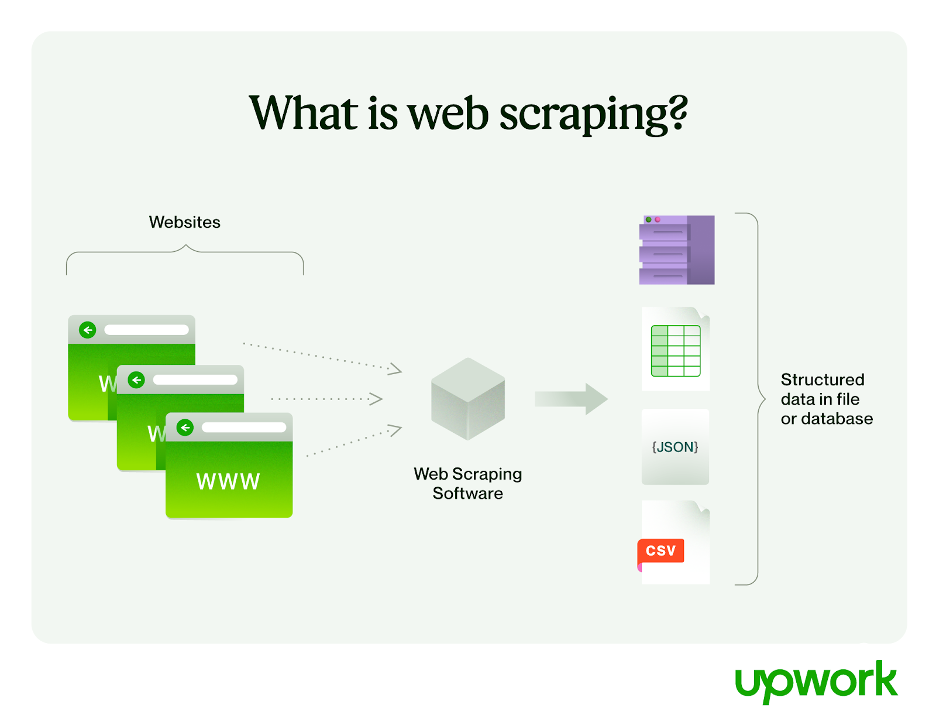

What is Web Scraping?

Web scraping is akin to deploying automated robots to gather data and content from websites. In essence, it uses code to navigate the web and extract information from web pages automatically. The source content is usually HTML, XML, or another web format, and the extracted data is then transformed into a more user-friendly format, such as CSV, an Excel spreadsheet, or a database.

Web scraping supports a wide range of applications, and the process typically unfolds in three steps:

- Crawling: The initial phase involves tools or bots traversing through diverse pages on the internet to identify relevant websites from which data needs to be harvested. This navigation is facilitated by leveraging URLs and sitemaps, allowing the crawler to pinpoint specific pages of interest.

- Scraping: Once the relevant pages are identified, the scraping tool comes into play, extracting the necessary data from these web pages. This extraction is commonly executed by parsing the HTML content of the web page and searching for specific data points predefined by the user.

- Data Processing: The extracted data then goes through several processing steps: cleaning, removing duplicates, and organizing it into a structured, usable format. The aim is to improve the quality, reliability, and accuracy of the data. Depending on the intended application, the transformed data is then ready for analysis or storage.
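The three steps can be illustrated on a single page with nothing but Python’s standard library. This is a minimal sketch, assuming the HTML has already been downloaded; the sample page, the `example.com` URLs, and the helper names are hypothetical. Here `html.parser` stands in for a fuller parser such as BeautifulSoup, and the email pattern is deliberately simplified.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Hypothetical page content; a real crawler would download this over HTTP.
SAMPLE_HTML = """
<html><body>
  <a href="/team">Our team</a>
  <a href="https://example.com/contact">Contact</a>
  <a href="https://other-site.org/">Partner</a>
  <p>Sales: Sales@Example.com, support@example.com</p>
  <p>Reach sales again at sales@example.com</p>
</body></html>
"""

# Simplified pattern for email-like strings; it will not match every valid address.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class PageParser(HTMLParser):
    """Collects link targets and text content from a single HTML page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        self.text_parts.append(data)


def crawl_step(base_url, parser):
    """Crawling: resolve relative links and keep same-domain pages to visit next."""
    domain = urlparse(base_url).netloc
    absolute = (urljoin(base_url, href) for href in parser.links)
    return sorted({url for url in absolute if urlparse(url).netloc == domain})


def scrape_step(parser):
    """Scraping: pull raw email-like strings out of the page text."""
    return EMAIL_RE.findall(" ".join(parser.text_parts))


def process_step(raw_emails):
    """Processing: normalize case and drop duplicates."""
    return sorted({email.lower() for email in raw_emails})


parser = PageParser()
parser.feed(SAMPLE_HTML)
print(crawl_step("https://example.com/", parser))
# ['https://example.com/contact', 'https://example.com/team']
print(process_step(scrape_step(parser)))
# ['sales@example.com', 'support@example.com']
```

A production crawler would add fetching, politeness delays, and a queue of pages still to visit. The structure stays the same, though: links found in the crawl step feed the next round, while the processing step turns messy raw matches into a clean list.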

Benefits of Web Scraping:

Web scraping’s versatility has made it an indispensable tool across many domains. Some prominent uses include:

- Market Research: Businesses leverage web scraping to conduct comprehensive market research. This involves gathering competitor data, monitoring market trends, and assessing product demand. By automating the extraction of relevant information from diverse sources on the internet, companies can gain valuable insights to inform their strategic decisions.

- Lead Generation: In marketing, web scraping plays a crucial role. Marketers use scraping techniques to extract contact information from websites and compile extensive lists of potential customers. This streamlines prospecting and, when sources are chosen carefully and refreshed regularly, helps keep the generated leads relevant and up-to-date.

- Price Monitoring: E-commerce businesses use web scraping to track pricing information online. By scraping competitors’ websites, companies can stay informed about price changes, product availability, and promotional offers, and adjust their own pricing in near real time to remain competitive.

- SEO Monitoring: Search Engine Optimization (SEO) professionals harness web scraping to monitor their websites’ performance on search engine results pages (SERPs). By scraping SERPs, SEO specialists can track rankings, assess visibility, and analyze the effectiveness of their optimization efforts. This data-driven approach helps them refine their strategies for better online visibility.

- Data for Machine Learning Models: Researchers and data scientists use web scraping to gather large and diverse datasets for training machine learning models. By extracting relevant data from various online sources, they can create robust datasets that contribute to developing and improving machine learning algorithms.

Strategies to Use Web Scraping in Lead Generation:

1. Identify the Right Sources:

The initial step in web scraping for lead generation involves meticulously identifying the most pertinent sources. The efficacy of this process hinges on pinpointing where the ideal customer base actively engages online. Depending on the industry, these sources could range from professional forums and social media platforms to industry-specific directories and even comment sections on relevant blogs.

Strategies for Source Identification:

- Competitor Analysis: Use tools such as Similarweb or Semrush to analyze your competitors’ online traffic. Knowing where your competitors’ traffic comes from reveals the spaces where potential leads congregate. This intelligence points your web scraping toward platforms frequented by your target audience.

- Customer Surveys: Surveying your existing customers directly can yield invaluable insights. Ask which online platforms they frequent for industry news, advice, or discussions. This direct feedback is a reliable guide for focusing your web scraping on the platforms most relevant to your customer demographic.

- Social Listening: Leverage tools like BuzzSumo or Mention to engage in social listening. Monitoring discussions where your brand or relevant keywords are mentioned helps identify active communities interested in your sector. This proactive approach lets you discern emerging trends, pinpoint engaged audiences, and refine your web scraping strategy.


2. Respect Legal Boundaries:

Legal considerations are paramount in web scraping because the rules vary across countries and websites. A cautious approach is necessary to avoid potential legal issues. This requires a thorough understanding of the legal framework surrounding data collection and a commitment to following the terms of service of the platforms being scraped.

Legal Compliance and Ethical Considerations:

- Review Terms of Service: Before starting any web scraping, review the target website’s terms of service thoroughly. Some platforms explicitly prohibit scraping in their terms, and those restrictions must be respected. Understanding the terms of service tells you whether data collection is permitted and helps you avoid legal repercussions.

- Comply with GDPR and Other Regulations: When collecting personal data about individuals in the European Union, strict compliance with the General Data Protection Regulation (GDPR) is essential. GDPR requires a lawful basis for processing personal data, such as consent or legitimate interests, and places strong emphasis on individuals’ privacy rights. Awareness of and adherence to other relevant regulations is equally important for lawful and ethical data collection.

- Ethical Data Use: Responsible web scraping goes beyond legal compliance. It is fundamental to evaluate the ethical implications of how scraped data is used. Practices should prioritize respect for individual privacy, transparency in data collection methods, and fair use of the extracted information. Businesses that follow these practices can build a positive reputation and earn their audience’s trust.

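In hiQ Labs v. LinkedIn, the U.S. Court of Appeals for the Ninth Circuit held that scraping publicly accessible data likely does not violate the Computer Fraud and Abuse Act (CFAA). The ruling addressed only the CFAA, so it does not make scraping public data legal in every case; other claims, such as breach of a website’s terms of service, can still apply. Legal interpretations also vary by jurisdiction, so staying informed about the legal landscape is an ongoing necessity in web scraping.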

3. Use Advanced Tools and Techniques:

Modern web scraping increasingly relies on advanced tools and techniques. They are crucial for handling dynamic websites, interpreting JavaScript-rendered content, and navigating intricate pagination or login forms.

Select the Right Tools for Advanced Scraping:

- Dynamic Content Handling: Tools like Selenium or Puppeteer have become indispensable for scraping websites heavily reliant on JavaScript for content rendering. These tools empower the scraper to interact with dynamic elements, ensuring comprehensive data extraction from websites with complex interactive features.

- Data Extraction Accuracy: Python libraries such as BeautifulSoup and Scrapy are well suited to precise data extraction. Their robust parsing capabilities let the scraper locate and extract exactly the data points required. Python’s popularity in this domain comes from its simple syntax and its extensive ecosystem of web scraping libraries.

- Avoiding Detection: Strategies such as rotating user agents and proxy servers help scrapers avoid detection. By spreading requests across different client identities and IP addresses, these techniques reduce the risk of IP bans or rate limits imposed by websites during scraping.
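Precise extraction usually means targeting specific elements instead of searching raw page text. The sketch below shows the idea with Python’s built-in `html.parser` as a dependency-free stand-in for BeautifulSoup or Scrapy selectors. The `contact-card`, `name`, `title`, and `email` class names are hypothetical and would have to match the real site’s markup.

```python
from html.parser import HTMLParser

# Hypothetical directory markup; real sites use their own tags and class names.
SAMPLE_HTML = """
<div class="contact-card">
  <h3 class="name">Ada Lovelace</h3>
  <span class="title">Head of Engineering</span>
  <a class="email" href="mailto:ada@example.com">Email</a>
</div>
<div class="contact-card">
  <h3 class="name">Grace Hopper</h3>
  <span class="title">CTO</span>
</div>
"""


class ContactCardParser(HTMLParser):
    """Builds one record per <div class="contact-card">.

    Assumes cards do not contain nested <div> elements.
    """

    TEXT_FIELDS = {"name", "title"}

    def __init__(self):
        super().__init__()
        self.records = []
        self._current = None  # record being filled, or None outside a card
        self._field = None    # text field currently being read

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "div" and "contact-card" in classes:
            self._current = {"name": None, "title": None, "email": None}
            self.records.append(self._current)
        elif self._current is not None:
            href = attrs.get("href") or ""
            if "email" in classes and href.startswith("mailto:"):
                self._current["email"] = href[len("mailto:"):]
            # Remember which text field (if any) this element holds.
            self._field = next((c for c in classes if c in self.TEXT_FIELDS), None)

    def handle_endtag(self, tag):
        self._field = None
        if tag == "div":
            self._current = None

    def handle_data(self, data):
        if self._current is not None and self._field is not None:
            self._current[self._field] = data.strip()


parser = ContactCardParser()
parser.feed(SAMPLE_HTML)
for record in parser.records:
    print(record)
```

Missing fields stay `None` instead of raising an error, so the second card is still captured without an email. That makes it easy to spot incomplete records later in the cleaning stage.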

4. Focus on Data Quality:

The success of lead generation depends on the quality of the collected data. For effective outreach, the data must be not only accurate but also relevant and up-to-date.

Implement Data Cleaning and Validation:

- Duplicate Removal: Data cleaning tools or scripts are essential to streamline outreach efforts and prevent redundancy. These tools identify and remove duplicate entries from the dataset, enhancing the efficiency of the lead generation process.

- Validation Checks: Regular validation of email addresses and phone numbers is crucial for maintaining the integrity of the contact list. Tools like Hunter.io for email verification prove valuable in this context, ensuring that the contact information is accurate and reliable.

- Regular Updates: Roles, companies, and contact information change frequently, so set up a routine for periodic data refreshes. Regular updates keep the scraped data current as the business environment evolves.
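The cleaning and validation steps above can be combined in a small script. This is a minimal sketch, assuming each lead is a dict with `name`, `email`, and `phone` keys (a hypothetical layout). The email check is syntax-only: it catches malformed addresses but cannot confirm that a mailbox exists, which is what a verification service such as Hunter.io is for. The 7 to 15 digit phone range is an assumption loosely based on the E.164 maximum of 15 digits.

```python
import re

# Syntax-only check; it does not prove the mailbox exists or accepts mail.
EMAIL_RE = re.compile(r"^[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(\.[A-Za-z0-9-]+)*\.[A-Za-z]{2,}$")


def normalize_phone(raw):
    """Keep digits only; return None if the length looks implausible (assumed 7-15)."""
    digits = re.sub(r"\D", "", raw or "")
    return digits if 7 <= len(digits) <= 15 else None


def clean_leads(records):
    """Normalize, validate, and de-duplicate leads by email (first occurrence wins)."""
    seen = set()
    cleaned = []
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        if not EMAIL_RE.match(email) or email in seen:
            continue  # drop malformed or duplicate addresses
        seen.add(email)
        cleaned.append({
            "name": (rec.get("name") or "").strip(),
            "email": email,
            "phone": normalize_phone(rec.get("phone")),
        })
    return cleaned


# Hypothetical raw rows as they might come out of a scraper.
raw = [
    {"name": " Ada Lovelace ", "email": "Ada@Example.com", "phone": "+44 20 7946 0958"},
    {"name": "Ada L.", "email": "ada@example.com ", "phone": "020 7946 0958"},
    {"name": "Bad Row", "email": "not-an-email", "phone": "123"},
    {"name": "Grace Hopper", "email": "grace@example.org", "phone": None},
]
for lead in clean_leads(raw):
    print(lead)
```

Lowercasing and stripping before the duplicate check matters. Without it, `Ada@Example.com` and `ada@example.com ` would be treated as two different leads.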

5. Monitor Competitors:

Incorporating web scraping into competitor monitoring provides invaluable insights into competitors’ online activities, marketing strategies, customer engagement, and product development. This monitoring helps refine lead generation strategies by revealing market gaps and opportunities for differentiation.

Competitive Intelligence Gathering:

- Product Changes and Updates: Regularly scraping competitor websites makes it possible to track new product launches, updates, and discontinued offerings. This timely intelligence helps businesses stay abreast of market trends, adjust their product roadmap accordingly, and respond quickly to evolving consumer demands.

- Pricing Strategies: By consistently monitoring competitors’ pricing models through web scraping, businesses can dynamically adjust their pricing strategies to remain competitive or effectively emphasize their unique value proposition.

- Customer Feedback: Scrutinizing review sites and social media mentions through web scraping provides valuable customer feedback on competitors’ products. Analyzing this feedback guides businesses in refining their offerings and tailoring customer service strategies to meet market expectations.
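Tracking product and pricing changes comes down to comparing successive scraping runs. The sketch below assumes each run produces a hypothetical `{product: price}` snapshot. It reports price changes, newly listed products, and products that disappeared, which may indicate discontinued offerings.

```python
from dataclasses import dataclass


@dataclass
class PriceChange:
    product: str
    old: float
    new: float

    @property
    def pct(self):
        """Percentage change; assumes the old price is non-zero."""
        return (self.new - self.old) / self.old * 100


def diff_prices(previous, current):
    """Compare two {product: price} snapshots from successive scraping runs."""
    changes = [
        PriceChange(name, previous[name], current[name])
        for name in sorted(previous.keys() & current.keys())
        if previous[name] != current[name]
    ]
    launched = sorted(current.keys() - previous.keys())      # newly listed products
    discontinued = sorted(previous.keys() - current.keys())  # no longer listed
    return changes, launched, discontinued


# Hypothetical snapshots from two runs against a competitor's catalog page.
yesterday = {"Widget Pro": 49.00, "Widget Lite": 19.00, "Widget Max": 99.00}
today = {"Widget Pro": 44.10, "Widget Lite": 19.00, "Widget Ultra": 129.00}

changes, launched, discontinued = diff_prices(yesterday, today)
for c in changes:
    print(f"{c.product}: {c.old:.2f} -> {c.new:.2f} ({c.pct:+.1f}%)")
print("Launched:", launched)
print("Discontinued:", discontinued)
```

A product missing from one run is not proof that it was discontinued. A page may have failed to load or changed its layout, so it is worth confirming absences over several runs before acting on them.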

6. Optimize for Scale and Efficiency:

As web scraping initiatives expand, managing operational scale becomes crucial for sustained efficiency. Automation and cloud computing emerge as pivotal components, enhancing scalability and ensuring data collection efforts remain responsive to market dynamics.

Scale Your Web Scraping Operations:

- Automation: Utilize scripting and scheduling tools to automate the web scraping process. Automation reduces manual oversight, expedites data collection, and ensures a streamlined and efficient operation.

- Cloud-Based Solutions: Harness the power of cloud services for storage and processing capabilities. This approach enables businesses to manage large volumes of data without substantial investments in hardware, fostering a flexible and cost-effective infrastructure.

- Data Management: Implement robust database management systems to organize and store the collected data and make it easy to retrieve. Efficient data management is pivotal for unlocking the full potential of the leads generated through web scraping.
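For automation and data management, each scheduled run (triggered by cron, a cloud scheduler, or similar) can write its results into a database keyed on a stable identifier, so repeated runs update existing leads instead of duplicating them. This is a minimal sketch using Python’s built-in `sqlite3`, assuming email is the unique key. The `leads` table and its columns are hypothetical, and the `ON CONFLICT ... DO UPDATE` upsert syntax requires SQLite 3.24 or newer.

```python
import sqlite3
from datetime import datetime, timezone

SCHEMA = """
CREATE TABLE IF NOT EXISTS leads (
    email      TEXT PRIMARY KEY,
    name       TEXT,
    source_url TEXT,
    last_seen  TEXT NOT NULL
)
"""


def save_leads(conn, leads, source_url):
    """Insert new leads and refresh existing ones (upsert keyed on email)."""
    now = datetime.now(timezone.utc).isoformat()
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            """
            INSERT INTO leads (email, name, source_url, last_seen)
            VALUES (:email, :name, :source_url, :last_seen)
            ON CONFLICT(email) DO UPDATE SET
                name = excluded.name,
                source_url = excluded.source_url,
                last_seen = excluded.last_seen
            """,
            [{**lead, "source_url": source_url, "last_seen": now} for lead in leads],
        )


conn = sqlite3.connect(":memory:")  # use a file path or a server database in practice
conn.execute(SCHEMA)

# Two simulated scheduled runs; the second updates Ada and adds Grace.
save_leads(conn, [{"email": "ada@example.com", "name": "Ada"}], "https://example.com/team")
save_leads(
    conn,
    [{"email": "ada@example.com", "name": "Ada Lovelace"},
     {"email": "grace@example.org", "name": "Grace Hopper"}],
    "https://example.com/team",
)
print(conn.execute("SELECT email, name FROM leads ORDER BY email").fetchall())
```

The `last_seen` timestamp also supports the regular-refresh practice: leads not seen in recent runs can be flagged for re-verification.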

7. Integrate with CRM and Marketing Automation Tools:

The value of scraped leads materializes when seamlessly integrated into sales and marketing processes. Ensuring harmonious integration with Customer Relationship Management (CRM) and marketing automation tools enhances lead nurturing and conversion processes.

Seamless Data Integration:

- APIs: Utilize Application Programming Interfaces (APIs) to automate the smooth transfer of scraped data into CRM systems. This ensures that leads are readily accessible for sales outreach and targeted marketing campaigns.

- Custom Integration Solutions: In instances where direct integration through APIs isn’t feasible, consider developing custom scripts that periodically import scraped data into CRM platforms, ensuring continuity in the data flow.

- Data Format Standardization: Standardize the format of scraped data to align with the requirements of CRM and marketing tools. This reduces the need for manual data manipulation and expedites the integration process.
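Standardization can be as simple as mapping every scraped record onto one fixed schema before export. This sketch assumes a hypothetical CRM import template with the columns in `CRM_FIELDS`; a real CRM’s import format or API field names will differ. It writes CSV using Python’s built-in `csv` module, a format most CRMs accept for bulk import.

```python
import csv
import io

# Hypothetical target schema; replace with the fields your CRM actually expects.
CRM_FIELDS = ["first_name", "last_name", "email", "company", "lead_source"]


def to_crm_row(scraped, source="web_scrape"):
    """Map one scraped record (with varying keys) onto the fixed CRM schema."""
    full_name = (scraped.get("name") or "").strip()
    first, _, last = full_name.partition(" ")  # naive split; adjust for real names
    return {
        "first_name": first,
        "last_name": last,
        "email": (scraped.get("email") or "").strip().lower(),
        # Different sources may label the company differently.
        "company": (scraped.get("company") or scraped.get("org") or "").strip(),
        "lead_source": source,
    }


def export_csv(records):
    """Render standardized rows as CSV text ready for a CRM bulk import."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=CRM_FIELDS)
    writer.writeheader()
    writer.writerows(to_crm_row(r) for r in records)
    return buf.getvalue()


# Hypothetical scraped records from two differently structured sources.
scraped = [
    {"name": "Ada Lovelace", "email": "Ada@Example.com", "org": "Analytical Engines Ltd"},
    {"name": "Grace Hopper", "email": "grace@example.org", "company": "Example Corp"},
]
print(export_csv(scraped))
```

Keeping the mapping in a single function means that when a source site or the CRM template changes, only one place needs updating.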

8. Conduct Ongoing Industry Research:

Proactive industry research is paramount in an ever-evolving market where trends and consumer preferences shift continually. Web scraping is a powerful tool for ongoing industry research, helping keep business strategies aligned with the market’s changing demands.

Utilize Web Scraping for Market Insights:

- Trend Analysis: Employ web scraping techniques to extract insights from industry news sites, forums, and social media platforms. This facilitates the identification of emerging trends that can inform crucial aspects such as product development, marketing strategies, and content creation.

- Competitor Content Strategy: Analyze competitors’ blogs and social media content through web scraping to deeply understand their content strategy. This invaluable intelligence allows businesses to refine their content marketing efforts, ensuring alignment with the interests and needs of the target audience.

- Regulatory Changes: Stay abreast of industry regulatory changes by actively scraping government and regulatory websites. This proactive approach ensures that businesses remain compliant with evolving legal requirements and can promptly adapt to any shifts in the regulatory landscape.
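A basic trend signal is how often terms appear in scraped headlines from one period compared with the previous one. The sketch below assumes headlines have already been scraped into lists; the sample headlines, the stopword list, and the `min_count` threshold are hypothetical. Serious analysis would use larger corpora and better text processing, such as stemming or phrase detection.

```python
import re
from collections import Counter

# Small illustrative stopword list; real projects would use a fuller one.
STOPWORDS = {"the", "a", "an", "and", "of", "for", "in", "on", "to", "is", "with", "how"}


def term_counts(headlines):
    """Count non-stopword terms across a batch of scraped headlines."""
    words = (w for h in headlines for w in re.findall(r"[a-z0-9]+", h.lower()))
    return Counter(w for w in words if w not in STOPWORDS and len(w) > 1)


def rising_terms(last_period, this_period, min_count=2):
    """Terms whose count grew between periods, largest increase first."""
    before, after = term_counts(last_period), term_counts(this_period)
    growth = {
        term: after[term] - before[term]
        for term in after
        if after[term] >= min_count and after[term] > before[term]
    }
    return sorted(growth.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical headlines scraped from industry news sites.
last_month = [
    "Pricing tips for SaaS startups",
    "How to hire a sales team",
    "SaaS pricing benchmarks",
]
this_month = [
    "AI agents for sales teams",
    "Pricing AI features in SaaS",
    "Why AI agents change outbound sales",
    "SaaS churn benchmarks",
]
print(rising_terms(last_month, this_month))
```

Terms that are already common, like "saas" here, are filtered out because they did not grow. That keeps the output focused on what is new.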

9. Maintain Ethical Standards:

Ethics in web scraping extend beyond mere legal compliance, emphasizing the importance of maintaining high ethical standards to foster trust among potential leads and the broader online community.

Ethical Web Scraping Practices:

- Transparency: Practice transparency in data collection methods. Where possible, inform individuals about the data collected from them and provide options for opting out, establishing a foundation of trust.

- Minimize Impact: Design scraping activities to minimize the impact on the websites involved. This includes respecting robots.txt directives and avoiding excessive requests that could burden the website’s servers.

- Data Security: Implement robust data security measures to safeguard the collected information. This involves encrypting stored data and restricting access to sensitive information to authorized personnel only.
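Respecting robots.txt and limiting request rates can be built directly into a scraper. This is a minimal sketch using Python’s `urllib.robotparser`, with the rules parsed from a string so it runs offline. The user agent string, the sample rules, and the one-second fallback delay are hypothetical. `Crawl-delay` is widely honored but is not part of the standardized robots exclusion protocol, so the code falls back to a default when it is absent.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical crawler identity; a descriptive user agent with contact info is good practice.
USER_AGENT = "ExampleLeadBot/1.0 (+mailto:ops@example.com)"

# In practice: rp.set_url("https://example.com/robots.txt"); rp.read()
# The rules are parsed from a string here so the sketch runs offline.
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())


def polite_urls(urls, robots, user_agent, default_delay=1.0, sleep=time.sleep):
    """Yield only URLs that robots.txt allows, pausing between them."""
    delay = robots.crawl_delay(user_agent) or default_delay
    first = True
    for url in urls:
        if not robots.can_fetch(user_agent, url):
            continue  # respect Disallow rules
        if not first:
            sleep(delay)  # space requests out to avoid burdening the server
        first = False
        yield url


candidates = [
    "https://example.com/team",
    "https://example.com/private/export",
    "https://example.com/contact",
]
for url in polite_urls(candidates, rp, USER_AGENT):
    print("fetch", url)  # the actual HTTP request would go here
```

The `sleep` parameter is injectable so the throttling can be tested without waiting. In normal use it defaults to `time.sleep`.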

Conclusion:

Web scraping transcends mere data collection; it serves as a conduit for businesses to learn, connect with the market, and adapt to evolving dynamics. By enabling the identification of optimal leads, understanding competitors, and aligning with customer needs, web scraping becomes an integral catalyst for business growth and success in the online ecosystem.

FAQs on Web Scraping:

How can I get thousands of leads for free?

While there is no easy way to obtain thousands of leads for free, businesses can create valuable content, leverage social media platforms, and use their existing networks for introductions or recommendations.

How do I find SEO leads?

SEO leads can be found by using SEO tools for research, reaching out to prospects through personalized messages, and creating educational content such as webinars or podcasts.

What is a lead generator tool?

It is software that aids in finding, contacting, and qualifying leads through various channels, including web scraping, landing pages, chatbots, and events.

Are lead generators worth it?

Their worth depends on how effectively they generate leads, increase conversion rates, and contribute to revenue growth. Cost and lead quality should also factor into the decision.