When making purchase decisions, we frequently turn to people with similar experiences who have already bought the product in question. This prompts us to seek out user reviews, looking for a sufficiently high average score, a substantial number of written reviews, and a significant number of ratings.

In fact, 95% of users rely on reviews to inform their decisions and to learn how well a product fits their requirements. Regrettably, the user experience (UX) design of reviews and ratings is often more perplexing and exasperating than helpful. It’s time to address these issues.

Benefits of Product Reviews and Ratings:

When customers delve into product reviews, their objective goes beyond merely encountering a multitude of positive remarks. While positive reviews play a crucial role in establishing trust, users are equally concerned with ensuring that the product will cater to their specific needs. Several attributes are scrutinized by customers to validate the efficacy of a product:

- Product Quality Assurance: Users seek confirmation that the product lives up to its advertised high-quality standards. They want to ascertain that the actual performance aligns with the marketing claims.

- Fair Pricing: Customers aim to determine that they are not overpaying for the product. They look for reviews that affirm the fairness and acceptability of the pricing in relation to the product’s features and benefits.

- Relevance to Personal Needs: Customers want assurance that the product they are considering addresses their specific needs effectively. Reviews that highlight similar use cases or requirements are valuable in this regard.

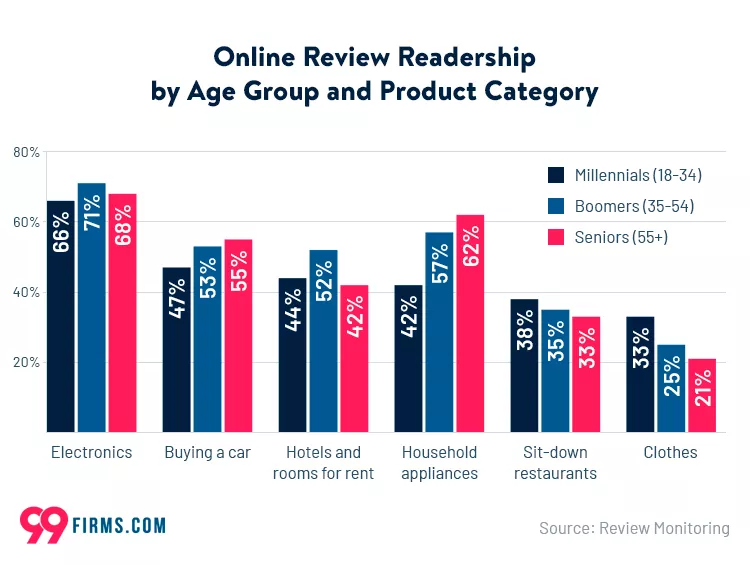

- Peer Satisfaction: Users often look for feedback from individuals similar to them in terms of age, experience, or specific needs. Knowing that people with comparable characteristics found satisfaction with the product lends credibility.

- Attention to Detail: Customers want to ensure they haven’t overlooked any critical details when making a purchase. Reviews that mention potential pitfalls, such as missing accessories or variations in sizing, help users make informed decisions.

- Risk Management: Customers evaluate the risk associated with their purchase. This includes examining the ease of return processes, the simplicity of cancellation, and the presence of customer-friendly policies such as a 30-day money-back guarantee.

- Customer Service and Integrity: Customers seek insights into the worst-case scenarios that could arise post-purchase. This involves evaluating the accessibility and responsiveness of customer service, ensuring it’s not hard to reach. Additionally, customers may be cautious about potential fraudulent activities and look for reviews that address such concerns.


Top Strategies for Effective Use of Reviews and Ratings:

1. Encouraging Negative Reviews for Improved Trust and Transparency

It’s a common behavior among online shoppers to scrutinize negative reviews meticulously, often considering them more seriously than positive ones. This inclination stems from the need to address concerns and skepticism that naturally arise during the decision-making process. Negative reviews, when specific and detailed, play a crucial role in building trust and transparency, guiding customers towards more informed choices.

Specific negative reviews offer customers the opportunity to connect the dots, enabling them to relate to the concerns raised. This connection becomes a pivotal factor in establishing trust, particularly when customers can ascertain that the issues highlighted in negative reviews are not applicable to their situation.

Typically, negative reviews focus on specific aspects that went awry during the purchasing experience. For reputable products and brands, this list is often short. It might involve occasional spikes in customer service load, holiday-related delivery delays, or a high-severity application bug that was resolved in time. Importantly, these issues are usually time-bound and don’t persist throughout the entire year.

However, when a product is fundamentally flawed, confusing, malicious, or overly complex, customers become vigilant for potential red flags. The complete absence of negative reviews can itself be perceived as a red flag, possibly concealing critical underlying issues.

Notably, not every negative review is negative for every customer. Customers tend to seek feedback from individuals in similar circumstances, focusing on issues relevant to their own needs. For instance, concerns about shipping packaging for overseas orders or outdoor visibility might be less critical for a customer ordering domestically for home use. These issues pale in comparison to more severe concerns like extreme bugginess, poor customer support, major refund troubles, or cancellation issues.

To foster trust and transparency, businesses should consider encouraging customers to share honest negative reviews, emphasizing specificity about the disliked aspects of their experience. Prompting customers to clarify if and how the issues were resolved in the end adds valuable context. Incentivizing users with bonus points or coupon codes for their next billing cycle or purchase serves as a proactive strategy to elicit candid feedback and ultimately enhance the credibility of the brand.

2. Always Display Decimal Ratings Alongside the Number of Reviews for a More Comprehensive Assessment

In the realm of online shopping, customers heavily rely on precise evaluations of experiences shared by fellow consumers. However, many websites fall short in providing detailed insights, often opting for bright orange stars as visual indicators of overall satisfaction. While these stars convey a general sense, they lack the specificity needed to differentiate between nuanced experiences.

The issue arises from the aggressive rounding of scores, leading to the loss of essential details between distinct rating levels such as “4 stars” and “5 stars.” Stars alone fail to offer adequate context, leaving users unable to discern specific aspects liked or disliked, gauge satisfaction among users with similar profiles, or understand the product’s particular strengths and weaknesses.

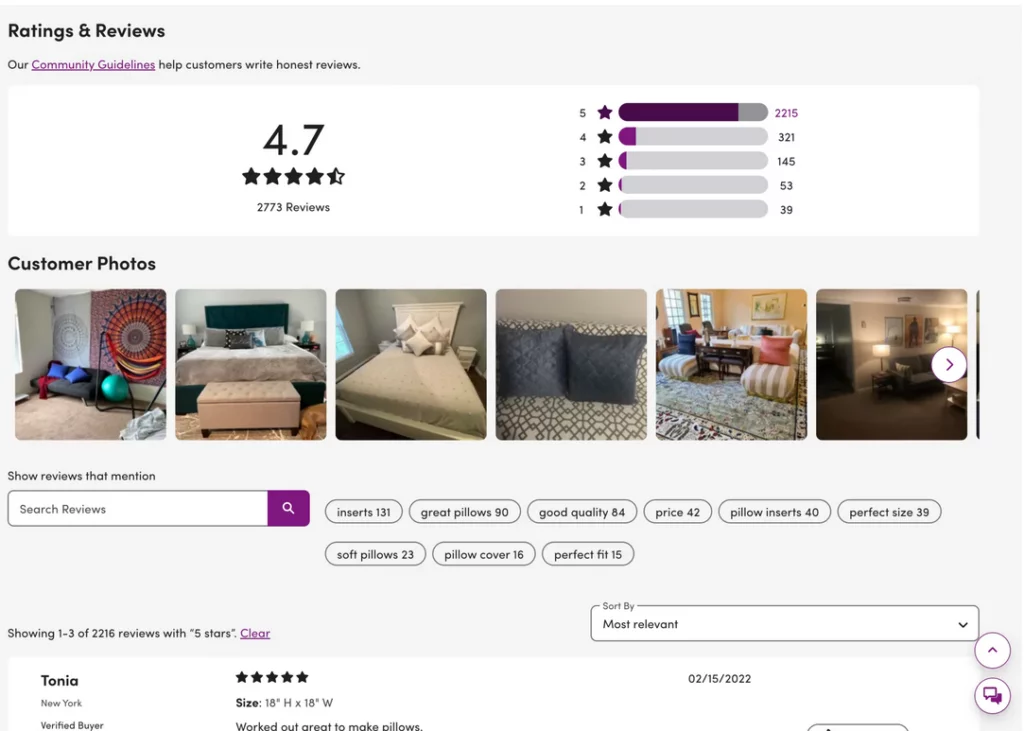

To enhance the assessment process, it is advisable to show a decimal average score (e.g., 4.7 out of 5) along with the total number of reviews (e.g., 78 reviews). The decimal score provides a more refined estimate, while the total number of reviews shows whether a substantial pool of contributors stands behind the overall score.

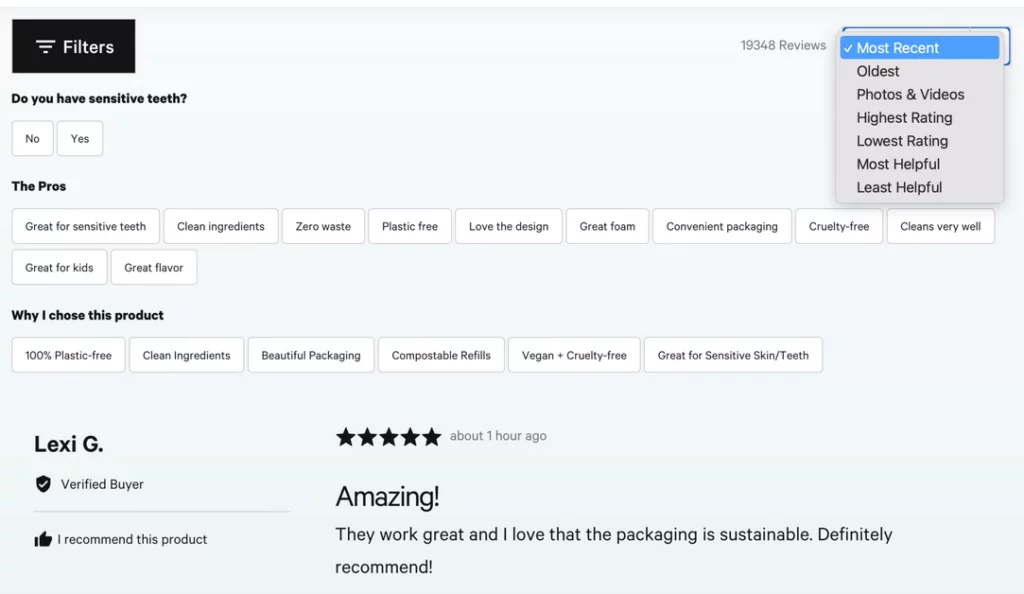
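As a minimal sketch of the label described above, the following Python function formats a decimal average together with the review count. The function name `rating_summary` and the exact wording of the label are illustrative assumptions, not a prescribed format.

```python
from __future__ import annotations


def rating_summary(ratings: list[int], scale: int = 5) -> str:
    """Build a label such as '4.6 out of 5 (5 reviews)' from raw star ratings."""
    if not ratings:
        # Avoid showing a misleading "0.0" when nobody has rated yet.
        return "No reviews yet"
    average = sum(ratings) / len(ratings)
    noun = "review" if len(ratings) == 1 else "reviews"
    # One decimal place keeps the detail that rounded stars would hide.
    return f"{average:.1f} out of {scale} ({len(ratings):,} {noun})"


print(rating_summary([5, 5, 4, 5, 4]))  # 4.6 out of 5 (5 reviews)
```

Keeping the count in the same string as the average makes it harder for a design to show one without the other.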

Not every site shows both. Bite.com, for instance, showcases the total number of reviews (19,348) without revealing an average score. When a product accumulates an overwhelming number of positive reviews, displaying the average score may become less critical.

The significance of the number of reviews cannot be overstated. Usability testing indicates that customers often prefer products with 4.5-star averages backed by a higher number of reviews over perfect 5-star ratings with fewer reviews. The majority of customers would choose a product with a higher number of ratings despite a slightly lower average.

Consider the example of two identical products where one has a 4.5 rating based on 180 reviews, and the other boasts a 4.8 rating with 39 reviews. The majority of customers are likely to opt for the first product due to the higher number of ratings. This underscores the importance of showing the number of ratings together with the average score, to avoid a bias toward products with better ratings but fewer reviews.
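If a product listing needs to be sorted in a way that reflects this preference, one option is a damped (Bayesian-style) average that pulls scores backed by few reviews toward a neutral prior. This technique is not taken from the usability findings above; it is a sketch, and the name `damped_average` as well as the prior values (a mean of 4.0 weighted like 50 reviews) are assumptions chosen for illustration.

```python
def damped_average(average: float, count: int,
                   prior_mean: float = 4.0, prior_weight: int = 50) -> float:
    """Pull an average toward prior_mean; the pull weakens as count grows."""
    return (prior_weight * prior_mean + count * average) / (prior_weight + count)


product_a = damped_average(4.5, 180)  # 4.5 average from 180 reviews
product_b = damped_average(4.8, 39)   # 4.8 average from 39 reviews

# With these assumed priors, the product with more reviews ranks first.
print(product_a > product_b)  # True
```

Different prior values can change the ordering, so they would need to be tuned against real catalog data.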

As for the ideal score, products with scores between 4.5 and 4.89, coupled with a substantial number of reviews (75+), are generally competitive. Beyond 4.9, customers may become skeptical and start looking for potential traps or undisclosed flaws. An average rating above this point may be counterproductive: it is rare in real-life scenarios unless it is based on a very small and possibly biased sample of reviews, so it tends to arouse suspicion.

3. Present a Comprehensive Rating Distribution Summary for In-Depth Insights

In conjunction with the familiar bright yellow stars, the utilization of distribution summaries for ratings has become a pivotal aspect of the online shopping experience. These summaries offer a nuanced understanding of the relationship between high and low-score reviews, providing customers with a detailed overview of the product’s average quality for the majority of users. They enable users to quickly assess whether the product garnered overwhelmingly positive or negative feedback.

The efficacy of rating distribution summaries lies in their ability to reveal distinct patterns that users instinctively seek. Users swiftly eliminate options with an imbalanced number of low-score reviews or an excess of mid-score reviews. Conversely, products lacking any low-score reviews are often disregarded.
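The data behind such a summary is simple to derive. The sketch below, with the hypothetical helper `rating_distribution`, counts how many ratings fall on each star level and what share of the total each level holds; the text bar rendering at the end is only an illustration.

```python
from collections import Counter


def rating_distribution(ratings, scale=5):
    """Return (stars, count, percent) rows from the highest level to the lowest."""
    counts = Counter(ratings)
    total = len(ratings)
    rows = []
    for stars in range(scale, 0, -1):
        count = counts.get(stars, 0)
        percent = round(100 * count / total) if total else 0
        rows.append((stars, count, percent))
    return rows


# Example data: mostly 5-star ratings, some 4-star, and a few 1-star ratings.
sample = [5] * 6 + [4] * 2 + [1] * 2
for stars, count, percent in rating_distribution(sample):
    bar = "#" * (percent // 10)
    print(f"{stars} stars | {bar:<10} | {count} ({percent}%)")
```

Levels with no ratings are still listed, so an empty middle or a missing low end stays visible rather than silently disappearing.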

The ideal distribution pattern, which instills trust and garners optimal performance, is known as the J-shaped distribution pattern in user research. This pattern entails a substantial majority of 5-star reviews, followed by a significant number of 4-star reviews, and ultimately complemented by a sufficiently large (yet not excessive) number of low-score reviews. Crucially, this distribution should include enough negative reviews to provide customers with insights into the most adverse experiences they might encounter.

While the J-shaped distribution pattern is often perceived as ideal, it conceals certain challenges for businesses. It creates an expectation for a specific distribution that may not always align with the product’s true performance, potentially posing difficulties for businesses striving to meet these predetermined patterns. As a result, businesses should navigate these expectations carefully and aim for genuine representations of customer experiences to build trust and credibility.

4. Challenges of the J-Shaped Distribution Pattern

The distinctive name of the J-shaped distribution pattern stems from its visual resemblance to the capital letter “J.” This distribution exhibits a small spike in the most negative reviews (★), a flat middle ground (★★, ★★★), and a prominent spike in the most positive ones (★★★★★). It deviates from the conventional bell curve that might be expected in typical distributions.

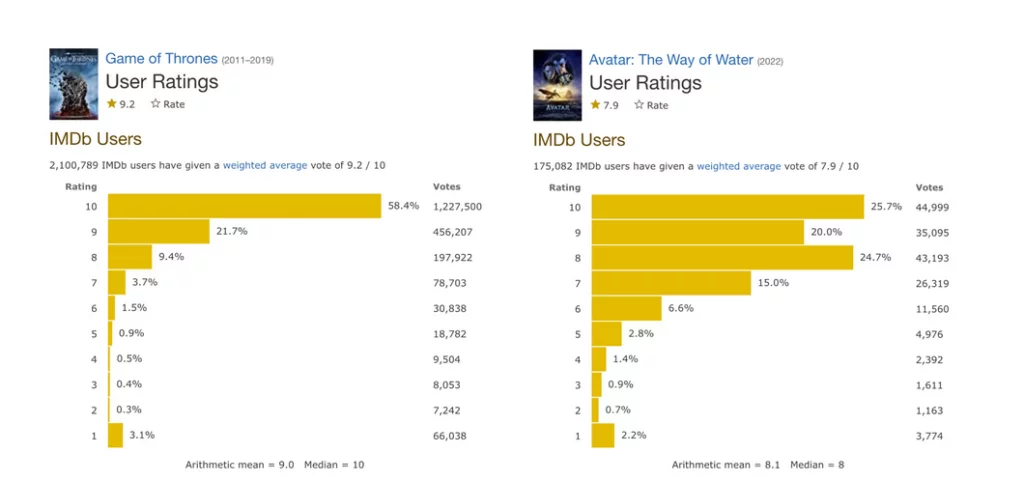

Examining the IMDb scores for “Game of Thrones” and “Avatar: The Way of Water” provides a practical illustration. In “Game of Thrones,” the distribution of top scores is clearly defined, while “Avatar: The Way of Water” exhibits a less conclusive distribution despite stronger negative ratings. This difference highlights the potential performance variations between products.

Furthermore, both examples emphasize that the lowest score (Rating 1) tends to receive disproportionate attention, reflecting the characteristics of the J-shaped distribution. Why does this distribution occur?

According to Sav Sidorov and Nikita Sharaev, user feedback often skews toward the extremes. Individuals who are moderately satisfied may not engage in leaving a review, while those who are extremely pleased or dissatisfied are more inclined to share their opinions. This bias makes rating scores susceptible to the strongest opinions at the extremes, leading to potential inaccuracies.

Sav has proposed an alternative design featuring four options (↑, ↓, ↑↑, and ↓↓), allowing customers to click or tap multiple times to express a stronger assessment. Another potential solution involves introducing a delay for reviews, as observed on Etsy, where customers must wait a week before providing a review, ensuring a more comprehensive product experience.

While these solutions may offer improvements, the key lies in providing context to explain the distribution. Breaking down the rating distribution summary by product attributes can be instrumental in offering a nuanced understanding of customer sentiments.

5. Enhancing the Rating Distribution Summary with Specific Product Attributes

While a distribution summary offers a comprehensive overview, customers often face challenges in extracting insights about specific product attributes, such as battery life or feature sophistication. To address this, a more refined approach involves breaking down the distribution summary further and highlighting average scores for individual product attributes, empowering customers with targeted information.

For instance, customers can provide feedback on distinct qualities like appearance, value for money, product quality, battery life, and more. By prompting users to evaluate these specific attributes during their feedback, separate average scores can be calculated for each, offering a more nuanced understanding of the product’s performance.
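A rough sketch of that calculation follows. It assumes each review is stored as a dictionary mapping attribute names to a score, and that reviewers may skip attributes; the function name `attribute_averages` and this data shape are hypothetical.

```python
from collections import defaultdict


def attribute_averages(reviews):
    """Average each attribute over the reviews that actually rated it."""
    totals = defaultdict(lambda: [0, 0])  # attribute -> [score sum, rating count]
    for review in reviews:
        for attribute, score in review.items():
            totals[attribute][0] += score
            totals[attribute][1] += 1
    return {name: round(total / count, 1) for name, (total, count) in totals.items()}


reviews = [
    {"battery life": 4, "appearance": 5},
    {"battery life": 3},
    {"appearance": 4, "value for money": 5},
]
print(attribute_averages(reviews))
# {'battery life': 3.5, 'appearance': 4.5, 'value for money': 5.0}
```

Averaging only over reviews that rated an attribute avoids treating a skipped attribute as a score of zero.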

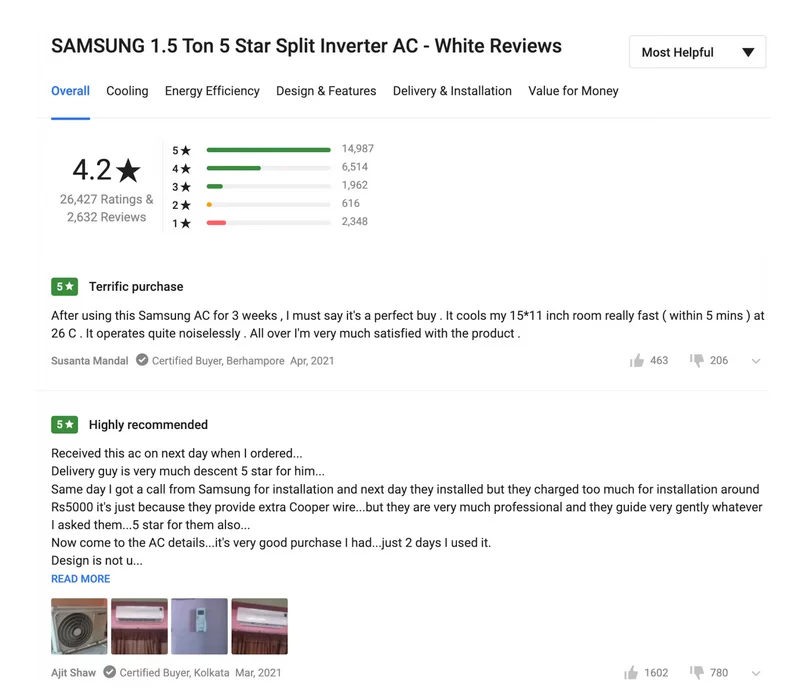

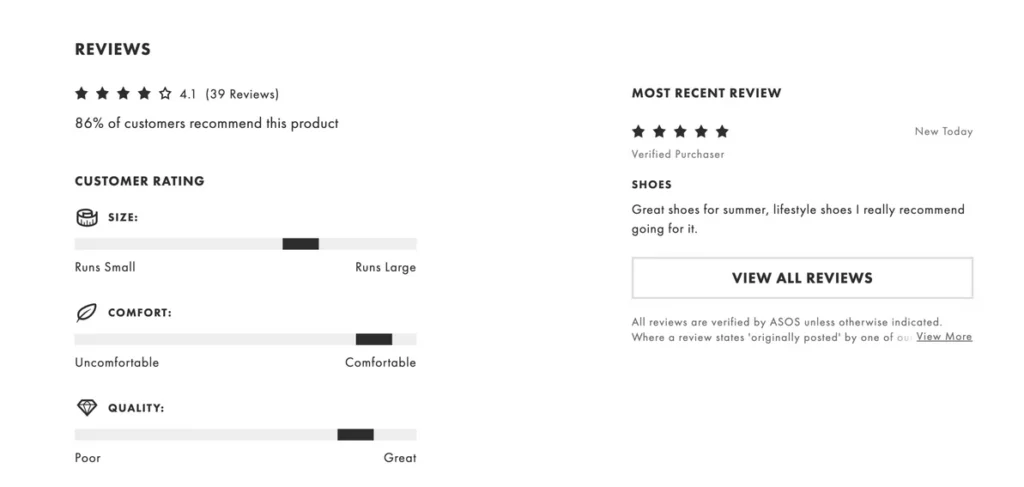

Flipkart, as depicted above, encourages customers to provide feedback categorized into specific product attributes relevant to the item. While the color coding denotes positive and negative reviews, improvements in accessibility and filtering options could enhance the user experience.

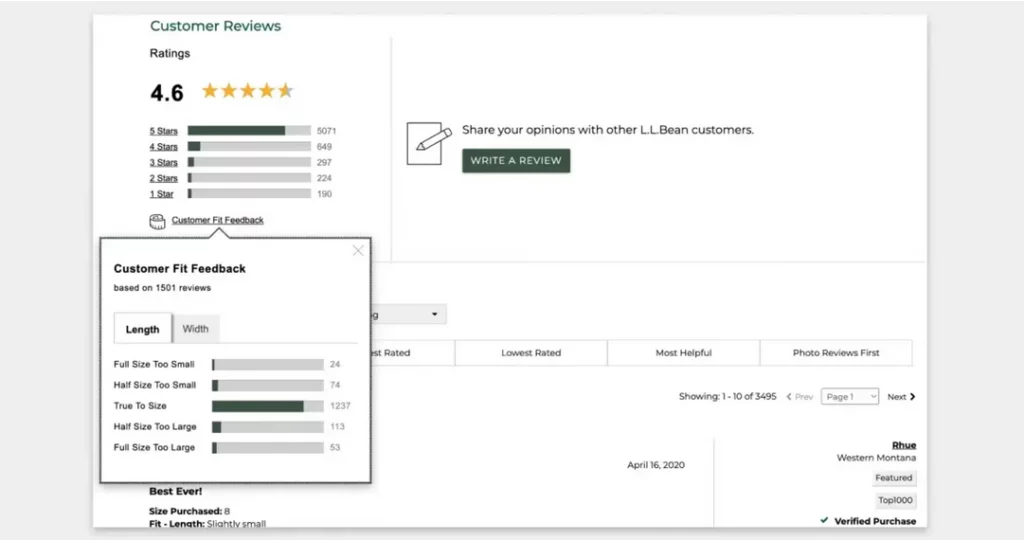

Adidas and LL Bean adopt a similar approach, allowing customers to delve into ratings and reviews for specific qualities such as comfort, quality, fit, and support. Users can explore detailed insights, whether in the form of a position on a scale or an additional distribution summary. These patterns provide clarity on how well a product fares in relation to particular attributes, catering to the diverse preferences of customers.

6. Enriching User Reviews Through Recommended Tags

While product attributes play a crucial role, they may not capture the entirety of the user experience. Even a well-designed product may not suit every customer’s needs, making it essential to provide additional insights beyond standard specifications. To facilitate this, suggesting relevant tags during the review-writing process can significantly enhance the value of user feedback.

These suggested tags could encompass a range of aspects, from general qualities like “great fit,” “ideal for kids,” and “easy to use” to more personalized and individualized descriptors. The aim is to capture insights not only about the product but also about the customers who have already made a purchase. By doing so, prospective buyers can easily relate to existing customers, finding commonalities that enhance the relevance of published reviews.

Personal details might include usage frequency, experience level, age range, or current location, tailored to the nature of the product. For beauty products, for instance, inquiries could extend to preferred look, skin type, shade, glow, scent, facial characteristics, texture, and typical makeup type, a practice adopted by platforms like Glossier and Sephora.

Displaying these tags as additional rating filters facilitates quicker access to relevant reviews for customers, allowing them to explore scores and feedback for critical attributes based on the experiences of users with similar preferences. This personalized approach proves more valuable than generalized feedback based solely on averages.
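Treating tags as filters can be sketched as a simple subset check. The example assumes each review carries a `tags` list and a `stars` value; both field names and the helper `filter_by_tags` are illustrative.

```python
def filter_by_tags(reviews, selected_tags):
    """Keep reviews that carry every selected tag; no selection keeps all reviews."""
    wanted = set(selected_tags)
    return [review for review in reviews if wanted <= set(review["tags"])]


reviews = [
    {"stars": 5, "tags": ["dry skin", "daily use"]},
    {"stars": 2, "tags": ["oily skin"]},
    {"stars": 4, "tags": ["dry skin"]},
]

matching = filter_by_tags(reviews, ["dry skin"])
average = sum(review["stars"] for review in matching) / len(matching)
print(len(matching), average)  # 2 4.5
```

Recomputing the average over the filtered reviews is what lets a customer see the score among people like them rather than the overall one.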

7. Spotlighting Social Validation Through Recommendations in Reviews

In addition to suggested tags, another useful question to ask existing customers at the end of their review is whether they would recommend the product to friends, colleagues, or even strangers. The answers yield a significant metric that is often overlooked but can be a game-changer: the recommendation score.

As exemplified by Asos, showcasing the percentage of customers who recommend the product, such as “86% of customers recommend this product,” offers a distinct perspective compared to conventional 5-star or 1-star ratings. Even customers moderately satisfied, perhaps leaning towards a 3-star rating, might still wholeheartedly recommend the product to others, emphasizing their contentment with its overall quality.

Emphasizing the number of customers recommending the product is a valuable strategy. A commendable benchmark is achieving a recommendation score above 90%, but exceeding 95% may raise suspicions.

To amplify the impact, specifying the customer segment endorsing the product and allowing users to select the group most relevant to them can further enhance the effectiveness of this approach. Incorporating nuanced details like experience level, frequency of use, or project type ensures a tailored and compelling statement, such as “86% of customers (5+ years of experience, enterprise-level) recommend this product,” resonating strongly with individuals fitting that specific group.
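The segment-specific score can be sketched as follows, assuming each review records a boolean `recommends` answer plus optional segment fields such as `experience`. These field names and the function `recommendation_score` are assumptions made for the example.

```python
def recommendation_score(reviews, segment=None):
    """Percentage of reviewers who recommend the product, optionally within a segment.

    Returns None when no review matches, so the UI can hide the statement.
    """
    segment = segment or {}
    matching = [
        review for review in reviews
        if all(review.get(field) == value for field, value in segment.items())
    ]
    if not matching:
        return None
    recommended = sum(1 for review in matching if review["recommends"])
    return round(100 * recommended / len(matching))


reviews = [
    {"recommends": True, "experience": "5+ years"},
    {"recommends": True, "experience": "5+ years"},
    {"recommends": False, "experience": "beginner"},
    {"recommends": True, "experience": "beginner"},
]
print(recommendation_score(reviews))                              # 75
print(recommendation_score(reviews, {"experience": "beginner"}))  # 50
```

For small segments, it is worth showing the number of matching reviews next to the percentage, for the same reason the review count belongs next to the average score.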

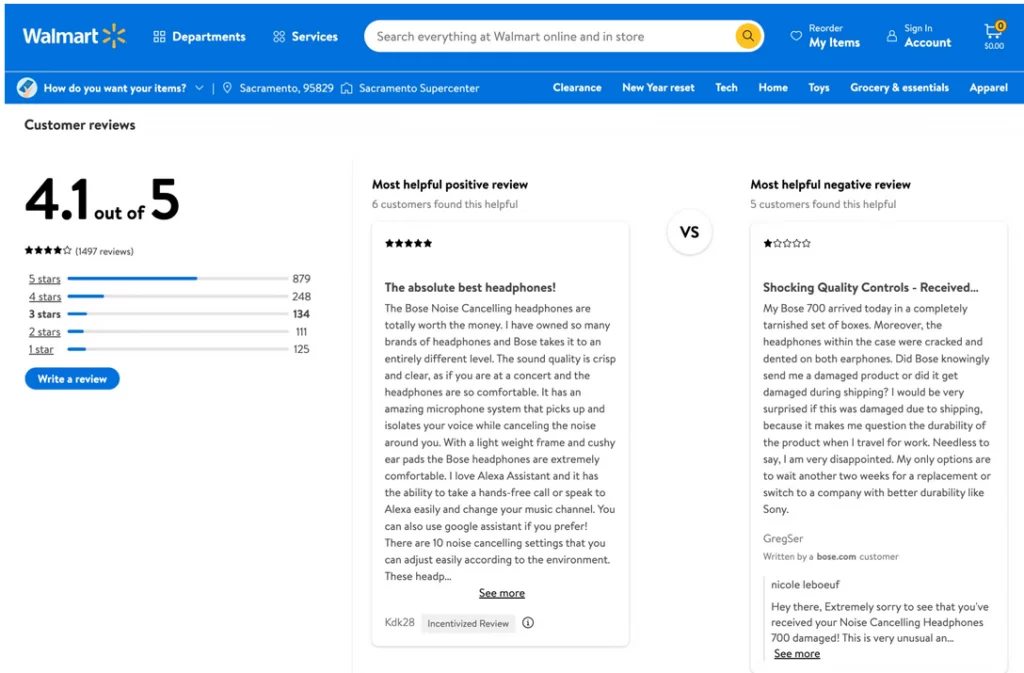

8. Enable Users to Highlight Helpful Reviews

Not all reviews are equally informative or beneficial. Some may lack specificity, while others focus on irrelevant details. To ensure that the most relevant reviews rise to the top of the list, users can be encouraged to mark reviews as helpful, irrespective of whether they are positive or negative.

Glossier.com

Highlighted reviews, encompassing a variety of perspectives, can be featured prominently at the beginning of the reviews section, accompanied by the count of users who found them helpful. This practice significantly enhances credibility and expedites the path to relevant information, as these highlighted reviews have garnered validation from other customers, instilling authenticity and trust.
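One possible way to pick the highlighted reviews is to take the most helpful positive review and the most helpful critical one, so both perspectives appear at the top. The sketch below assumes `stars` and `helpful` fields and treats 4 stars and above as positive; that threshold and the name `featured_reviews` are assumptions.

```python
def featured_reviews(reviews, positive_from=4):
    """Return the most helpful positive review and the most helpful critical one."""
    positive = [r for r in reviews if r["stars"] >= positive_from]
    critical = [r for r in reviews if r["stars"] < positive_from]
    picks = []
    for group in (positive, critical):
        if group:  # Skip a group that has no reviews at all.
            picks.append(max(group, key=lambda r: r["helpful"]))
    return picks


reviews = [
    {"stars": 5, "helpful": 3},
    {"stars": 4, "helpful": 10},
    {"stars": 1, "helpful": 7},
    {"stars": 2, "helpful": 1},
]
for review in featured_reviews(reviews):
    print(f'{review["stars"]} stars, {review["helpful"]} people found this helpful')
```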

9. Offer Comprehensive Sorting Options and Introduce Search Autocomplete

Beyond merely allowing users to filter reviews based on a specific rating score, providing more nuanced sorting options is crucial. For instance, after applying a filter, users can sort reviews based on specific criteria. On Bite, users have the flexibility to sort by review date, the presence of photos and videos, as well as highest and lowest ratings, along with the most and least helpful reviews.

Wayfair.com provides slightly more refined sorting options, including customer photos, most helpful, and latest reviews. Options like sorting by least helpful or oldest reviews may be less utilized by users.
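The sorting options mentioned above can be modeled as a small lookup table of sort keys. This is a sketch under assumed field names (`date` as an ISO-formatted string, `stars`, `helpful`, `has_photos`); it does not describe how Bite or Wayfair implement sorting.

```python
# Each option maps to (key function, reverse flag) for Python's sorted().
SORT_OPTIONS = {
    "newest": (lambda r: r["date"], True),
    "highest rating": (lambda r: r["stars"], True),
    "lowest rating": (lambda r: r["stars"], False),
    "most helpful": (lambda r: r["helpful"], True),
    "with photos first": (lambda r: r["has_photos"], True),
}


def sort_reviews(reviews, option):
    """Return a new list sorted by the chosen option; ties keep their original order."""
    key, reverse = SORT_OPTIONS[option]
    return sorted(reviews, key=key, reverse=reverse)


reviews = [
    {"id": 1, "date": "2024-01-05", "stars": 5, "helpful": 2, "has_photos": False},
    {"id": 2, "date": "2024-03-01", "stars": 2, "helpful": 9, "has_photos": True},
    {"id": 3, "date": "2023-11-20", "stars": 4, "helpful": 5, "has_photos": False},
]
print([r["id"] for r in sort_reviews(reviews, "most helpful")])  # [2, 3, 1]
```

Because the sort runs on whatever list it receives, it can be applied after a rating or tag filter without any extra logic.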

Moreover, platforms like Glossier and Wayfair extend sorting capabilities to include reviews with customer photos and offer a search-within-reviews feature. Implementing autocomplete in the search function can enhance the user experience. Wayfair not only displays product tags and customer photos but also emphasizes how frequently keywords are mentioned in reviews, helping users quickly identify pertinent information.
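A minimal sketch of autocomplete with keyword frequency is shown below. It counts how many reviews mention each word and suggests the most frequently mentioned words that start with the typed prefix. The function names and the simple word-splitting rule are assumptions; a production search would also need stop-word handling and phrase support.

```python
import re
from collections import Counter


def keyword_counts(review_texts):
    """Count how many reviews mention each word (at most once per review)."""
    counts = Counter()
    for text in review_texts:
        counts.update(set(re.findall(r"[a-z']+", text.lower())))
    return counts


def autocomplete(prefix, counts, limit=5):
    """Suggest words starting with prefix, most frequently mentioned first."""
    prefix = prefix.lower()
    matches = [(word, n) for word, n in counts.items() if word.startswith(prefix)]
    matches.sort(key=lambda item: (-item[1], item[0]))
    return matches[:limit]


texts = ["Great battery life", "Battery drains fast", "Bag is great, battery too"]
counts = keyword_counts(texts)
print(autocomplete("ba", counts))  # [('battery', 3), ('bag', 1)]
```

Returning the count with each suggestion lets the interface show how many reviews mention the keyword, as in the Wayfair example.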

Conclusion:

Achieving an effective user rating system demands careful consideration and implementation. The process involves not only collecting reviews but also emphasizing those that are pertinent to potential customers. Distribution charts, featuring both the number of ratings and decimal averages, provide a more nuanced understanding of the product’s overall performance.

To enhance the user experience, incorporating filters, tags, and search functionalities enables users to swiftly locate reviews from individuals with similar preferences and needs. As we navigate the intricacies of user ratings, it’s crucial to strike a balance between showcasing the diversity of opinions and highlighting the most helpful and relevant feedback.

FAQs on Reviews and Ratings:

What role do suggested tags play in user reviews?

Suggested tags in reviews offer a more personalized and detailed understanding of a product’s qualities. These tags, ranging from product-specific attributes to personal preferences, assist users in finding reviews that resonate with their unique needs.

Why is it important to break down the distribution summary by product attributes?

Breaking down the distribution summary by specific product attributes, such as appearance, value for money, or battery life, provides users with a more detailed assessment. This approach offers a tailored view of how a product performs in areas that matter most to individual users.

How can social proof be highlighted in user reviews?

Social proof in reviews can be emphasized by incorporating a recommendation score, indicating the percentage of customers who recommend the product. This metric goes beyond star ratings, offering insights into overall satisfaction and real-world endorsements.

What sorting options enhance the usability of user reviews?

Comprehensive sorting options, such as sorting by review date, the presence of photos, and helpfulness ratings, contribute to a more user-friendly experience. Users can efficiently navigate through reviews based on their specific preferences and criteria.

How can search autocomplete improve the efficiency of finding relevant reviews?

Search autocomplete features within reviews facilitate quicker and more accurate searches. Users can explore specific keywords or attributes mentioned in reviews, streamlining the process of finding information that aligns with their interests.